Search is changing faster than most businesses can keep up with. The old game of chasing rankings through keywords is being replaced by something bigger: AI assistants that think, talk, and act for users. This shift is what we call Generative Engine Optimization, or GEO. It’s about preparing your content and strategy for a world where the “search box” barely exists, but discovery still drives growth.

Rethinking Discovery: AI-First Search and the Rise of GEO

Search is going through a strange transformation – the kind that doesn’t have a clear before and after. It’s no longer just about typing words into a box and scrolling through a list of links. That era is mostly behind us.

What we’re left with today is a cluttered version of Google packed with AI-generated summaries, non-stop ads, snippets that answer your question before you ever click, and a shrinking slice of true organic results. Meanwhile, platforms like ChatGPT and Google’s own AI Mode don’t treat search as a destination at all – it’s just one function inside a broader assistant experience.

Looking ahead, the shift is even more dramatic. People may stop “searching” in the way we’ve known. Instead of opening a search engine, they’ll just ask their AI assistant – and let it quietly do the digging for them. This change isn’t years away – it’s already unfolding. With tools like Google’s Project Mariner and Astra, along with major moves from OpenAI, Amazon, and Meta, we’re stepping into the next phase of discovery: one that’s AI-first and powered by what we now call Generative Engine Optimization (GEO).

From Rankings to Relevance: NUOPTIMA’s GEO Approach

At NUOPTIMA, we don’t just talk about the AI shift – we build for it. Generative Engine Optimization (GEO) isn’t a trend we’re chasing. It’s a playbook we’ve written through hands-on experimentation and proprietary research. While SEO aims to win visibility through rankings, GEO is about becoming the answer itself – the content AI pulls when it responds to a user’s question.

Our team is built around that shift. We create authoritative, AI-readable content, map high-intent queries, and track the metrics that actually matter in this new search landscape – things like assistant citations, direct traffic from AI engines, and lead flow driven by smart content positioning. It’s not theory. It’s execution backed by results.

We’re not hard to find either. We share research openly, and we’re always up for a conversation – you can connect with us on LinkedIn.

AI-Led Discovery and the Realities of GEO

GEO, in its advanced form, is less about rules and more about managing probabilities. Nothing stays fixed for long. The systems that power AI search are still in flux – what works today might quietly break tomorrow.

Here’s the kind of landscape we’re navigating:

- One day, AI crawlers can’t process JavaScript – the next, they suddenly can.

- One week, OpenAI is leaning on Google and Bing’s search indexes – the next, it’s quietly spinning up its own.

- One moment, your website is your content’s primary home – the next, it’s just a data source for an AI’s memory.

There’s no stable ground. But we’re starting to see patterns. And if you’re paying attention, those signals can help shape the way you prepare your content – and your business – for what’s coming next.

Search Is Leaving the Keyboard Behind

One of the biggest shifts in how we find information is happening quietly – we’re moving past typing. While we’ve spent decades glued to keyboards, typing has always been a bit of a bottleneck. It’s not how we think. It’s slower than speaking, pointing, or just… looking.

That’s where voice, visual, and even embodied search come in. These aren’t futuristic ideas anymore – they’re already reshaping how people interact with AI. You might talk to an assistant, show it what you’re seeing, or use a wearable device that constantly interprets the world around you in real time.

Voice That Understands, Not Just Responds

Voice search has been the punchline in SEO circles for years, but let’s be honest – it’s everywhere already. Siri, Alexa, Google Assistant – they’re in people’s homes, pockets, and cars. What’s changed is that the next generation of AI is making voice interfaces way more intelligent and far more useful.

Take Google’s Project Astra. It’s not just voice search – it’s full-on dialogue. It understands different accents, responds across 24 languages, and holds a conversation in real time. That’s a different ball game.

This new generation of voice tech makes things feel almost effortless. You can ask layered, open-ended questions like you’re talking to someone who actually understands you – and get an answer right away, spoken back with context. Amazon’s updated Alexa+ takes it even further. It’s not just reactive – it’s conversational. You can plan your schedule, hunt for products, or just chat through ideas, all without lifting a finger.

When voice is powered by large language models, search stops feeling like a command line and starts to feel more like bouncing ideas off a smart friend.

Seeing Is Searching

Visual search is heading in the same direction. We’re already at a point where you can point your phone – or a pair of smart glasses – at something and just ask what it is. Google’s been pushing hard here: Lens can already recognize objects, read text from images, and translate signs in real time. But with Project Astra, things get more dynamic.

Using Astra in “Live” mode, you can hit a button and ask about anything in your camera feed – the AI sees what you see and responds instantly. Ask about a building, a product, even a recipe you’re cooking – and it starts talking back with answers in real time.

Discovery is no longer locked to screens and search bars – it’s starting to happen out in the real world. Picture this: you glance at a building, and your smart glasses tell you its backstory. Or you scan a product on a shelf, and your AI pulls up reviews, price comparisons, maybe even alerts you to a better deal nearby. Search is starting to work through sight, not just text.

What Embodied Search Looks Like

Embodied search goes a step further. It’s not just about voice or visuals – it’s about your assistant being aware of your surroundings. Devices like AR glasses are making that possible. Google’s prototype, which grew out of its Project Astra work, can view the world from your perspective and layer helpful info right onto what you’re seeing – in real time.

In this setup, the AI isn’t some tool you call on – it’s more like a co-pilot moving with you. It can describe what’s in front of you, guide you through a task, or even step in with support when needed. Think of a visually impaired user getting obstacle alerts while walking, or someone cooking a new recipe getting hands-free ingredient instructions on the fly. It’s search that shows up in the moment – without needing to be asked twice.

All of these new ways to interact – talking, showing, scanning – are pushing search engines into unfamiliar territory. They’re being forced to understand more than just text.

To keep up, search systems now need to interpret images, video, sound, and even 3D inputs with the same fluency as language. For SEOs and marketers, that’s a real shift. It’s no longer enough to optimize headlines and metadata. Now you’ve got to think about how your content performs when it’s read aloud, seen through a lens, or layered into AR. If an AI assistant can’t access it without a screen, you risk being skipped over entirely.

And the big players are already doubling down on this. Google’s pushing visual search with Lens and Project Astra. Amazon’s making Alexa smarter behind the scenes, letting it move through web pages and apps on your behalf. Meta’s been experimenting with search that runs through its messaging apps – think Instagram or WhatsApp – where you might ask your AI to find a place to eat nearby, right inside a chat thread.

Search isn’t just typed anymore. It’s spoken, shown, and sensed. The devices around us – phones, glasses, wearables – are quietly becoming the new front doors to search. Less friction, more flow.

Hyper-Personalized Search: When AI Knows You Too Well

Let’s be honest – how much of your personal data are you really willing to hand over for smarter, more “helpful” search results?

This is one of the big trade-offs coming fast. Future search is all about precision, and that precision depends on how well the AI knows you. We’re moving beyond simple location targeting or remembering your last few queries. Now, search engines are building full user profiles – pulling from your preferences, behaviors, past chats, and even your inbox – to generate answers that feel like they were written just for you.

And no one’s in a better position to do this than Google. With years of data from Search, Gmail, Maps, YouTube, and Android, it already knows more about your digital life than most people are comfortable admitting. And despite pressure from regulators, there’s no sign that grip is loosening anytime soon.

This level of personalization changes how queries work. Ask something simple like, “What should I make for dinner?” – and the AI might remember that last week you binged BBQ recipes, watched a Kansas City pitmaster on YouTube, and left a five-star review for a spicy sauce. Instead of generic suggestions, it could recommend a smoky, spicy dish you’d probably love, pulled from a video it already knows you’ll watch.

Convenient? Absolutely. Creepy? Depends who you ask.

This kind of hyper-personalization isn’t a side effect – it’s the goal. Google’s Project Astra, for example, is designed to learn your habits, preferences, and past choices so it can shape responses around what actually matters to you. It remembers if you’ve got dietary restrictions, if you tend to favor certain brands, or if you’ve already shown interest in a product. You’re not just getting a search result – you’re getting an answer that aligns with your style.

Who Else Is Building This?

OpenAI’s also deep in this space. ChatGPT has offered custom instructions since 2023 and added memory in 2024, so it can adapt to individual users over time. It picks up on:

- Your tone and phrasing.

- Favorite topics.

- The way you write.

So when two people ask the same question, they can get very different replies. If you’ve shown a preference for open-source tools when researching CRMs, for instance, ChatGPT can remember that and factor it into future answers. It’s less like querying a database and more like consulting a research assistant that knows your playbook.

Alexa+ from Amazon is pushing the same idea at home. It remembers:

- Purchase history.

- Music taste.

- News sources.

- Even family birthdays (if you’ve told it).

Say you ask for a family dinner idea: Alexa won’t just throw out random recipes. It’ll take into account your daughter’s vegetarian diet, your partner’s gluten sensitivity, and suggest something everyone can actually eat. Search results become more like tailored actions – a personal concierge that’s always in the loop.
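To make that filtering step concrete, here’s a minimal Python sketch of how an assistant might narrow dinner ideas to ones the whole household can eat. The household profile, the recipe tags, and the function names are all hypothetical; no assistant publishes its logic, and real systems would rank with learned models rather than a simple set check.

```python
from dataclasses import dataclass, field


@dataclass
class Recipe:
    name: str
    # Dietary labels the recipe satisfies, e.g. "vegetarian", "gluten-free".
    tags: set[str] = field(default_factory=set)


# Hypothetical household profile: each member's hard dietary requirements.
HOUSEHOLD = {
    "daughter": {"vegetarian"},
    "partner": {"gluten-free"},
}


def suitable_for_everyone(recipe: Recipe, household: dict[str, set[str]]) -> bool:
    """A recipe qualifies only if it meets every member's requirements."""
    required = set().union(*household.values())
    return required <= recipe.tags


def suggest(recipes: list[Recipe], household: dict[str, set[str]]) -> list[str]:
    return [r.name for r in recipes if suitable_for_everyone(r, household)]


recipes = [
    Recipe("Beef tacos on corn tortillas", {"gluten-free"}),
    Recipe("Vegetable lasagne", {"vegetarian"}),
    Recipe("Chickpea curry with rice", {"vegetarian", "gluten-free"}),
]

print(suggest(recipes, HOUSEHOLD))  # ['Chickpea curry with rice']
```

The hard constraints act as a filter before any ranking happens, which is why content that states its dietary facts clearly is easier for an assistant to match.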

Meta, meanwhile, is sitting on a massive pool of personal data from its platforms – and it’s starting to put that data to work. Reports suggest they’re building an AI-powered search engine that plugs directly into their ecosystem. That means:

- If you’re using WhatsApp or Instagram, Meta’s AI could tap into your social graph, browsing habits, and engagement history.

- Restaurant suggestions might be based on where your friends went.

- News could be tuned to the pages you follow.

It’s the kind of personalization that feels seamless when it works – but comes with trade-offs. The convenience is huge. The privacy concerns are, too. Still, this is the direction things are heading. In the near future, users won’t just expect relevant answers – they’ll expect answers that feel like they were written specifically for them.

For SEOs and content teams, this flips the playbook. You’re no longer optimizing for a broad “average user.” You’re trying to land content in front of an AI that’s filtering everything through a personal lens – one user at a time. That means creating content that speaks clearly to niche needs, specific behaviors, or well-defined personas. Generic content will fade into the noise.

It also raises the stakes for trust. If users are going to let assistants this deep into their personal lives, brands need to be worthy of that trust. But if you get it right – if your content aligns with the right profile, at the right moment – AI search becomes less of a ranking game and more of a one-on-one recommendation engine. One result, built just for them.

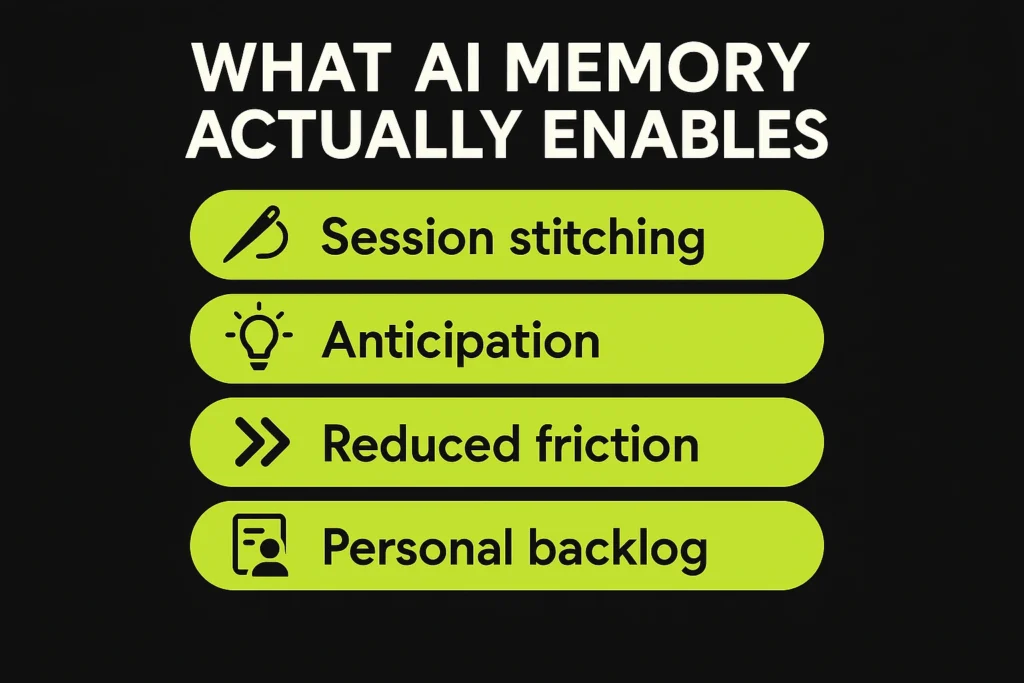

AI Memory: When Search Stops Forgetting

Personalization is only half the story. The other half is memory – and it’s quietly becoming a core feature of how AI search evolves.

Traditional search engines start fresh every time. Ask a question, get results, close the tab, and that’s the end of it. But AI assistants don’t work like that. They’re learning to remember. Future AI will keep context not just within one session, but across all of them – across devices, even across weeks or months.

We’re already seeing the first signs. ChatGPT, for instance, can hold a conversation that builds on itself, remembering what you said five prompts ago. But that’s just session-level context. What’s coming next is deeper and longer-term. Google’s Project Astra is already testing this kind of continuity – memory that follows you from phone to smart glasses, that remembers what you asked yesterday or last month, and brings that knowledge into the next interaction.

It’s the difference between asking a question and having an ongoing conversation. You might say, “What was that Thai spot I looked up a few weeks ago?” and your assistant will know exactly what you mean, because it remembers what you’ve searched for, what you skipped, and what you nearly booked. It’s not just smart – it’s paying attention.

What AI Memory Actually Enables

- Session stitching: Persistent memory that carries across apps, devices, and time.

- Anticipation, not reaction: AI can act before you ask, based on prior behavior.

- Reduced friction: No more repeating yourself across sessions or apps.

- Personal backlog recall: AI surfaces prior searches or skipped results when they become relevant.

- Deeper personalization: Recommendations that evolve as your preferences shift.

- Context-aware automation: Remembering unfinished tasks and nudging you later.
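A toy version of session stitching, backlog recall, and unfinished-task tracking fits in a few lines. The sketch below assumes a single memory store shared by every device and a naive keyword match; the class and field names are hypothetical, and a production assistant would likely use semantic retrieval and far stricter privacy controls.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class MemoryItem:
    text: str
    device: str
    timestamp: datetime
    status: str = "viewed"  # e.g. "viewed", "skipped", "nearly_booked"


class AssistantMemory:
    """Toy memory shared across sessions and devices (hypothetical design)."""

    def __init__(self) -> None:
        self.items: list[MemoryItem] = []

    def remember(self, text: str, device: str, timestamp: datetime,
                 status: str = "viewed") -> None:
        # Session stitching: every device writes to the same store.
        self.items.append(MemoryItem(text, device, timestamp, status))

    def recall(self, keyword: str,
               since: Optional[datetime] = None) -> list[MemoryItem]:
        """Backlog recall: matching items, newest first, optionally within a window."""
        hits = [
            item for item in self.items
            if keyword.lower() in item.text.lower()
            and (since is None or item.timestamp >= since)
        ]
        return sorted(hits, key=lambda item: item.timestamp, reverse=True)

    def unfinished(self) -> list[MemoryItem]:
        """Context-aware automation: things the user started but didn't finish."""
        return [item for item in self.items if item.status == "nearly_booked"]


now = datetime(2025, 6, 1, 12, 0)
memory = AssistantMemory()
memory.remember("Thai restaurant near the office", "phone",
                now - timedelta(weeks=3), "nearly_booked")
memory.remember("Thai green curry recipe", "laptop", now - timedelta(days=2))
memory.remember("Running shoes review", "smart glasses",
                now - timedelta(days=1), "skipped")

# "What was that Thai spot I looked up a few weeks ago?"
for item in memory.recall("thai", since=now - timedelta(weeks=4)):
    print(item.device, "|", item.text, "|", item.status)
```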

From Memory to Momentum: How AI Starts Acting on What It Remembers

When AI remembers, it doesn’t just respond – it starts to anticipate. This is where things get interesting. Instead of waiting for you to ask something, it can step in before you even realize you need help.

Amazon’s already hinting at this shift with Alexa+. It might prompt you to leave earlier if there’s traffic on the way to your meeting, or notify you that the noise-cancelling headphones you looked at last week just dropped in price. It’s search turning into support – and it’s happening in real time.

Google’s moving the same way. Sundar Pichai has outlined a vision where AI assistants track long-term memory across sessions, tasks, and devices. So if you’ve been planning a trip and come back a month later asking to book the same destination for spring, your assistant won’t need the full backstory – it already knows.

This kind of memory means users won’t need to keep repeating themselves. The assistant picks up where you left off – whether that’s a shopping query, a long project, or just planning dinner. It turns search into something continuous – less about answers in the moment, more about understanding over time.

And for content teams, this shift changes the rules. A user might land on your page once, but that interaction could echo long after. If an AI assistant stores that information – a guide, a review, a product detail – it can resurface it later when it’s actually needed. Not when someone searches, but when the context fits.

That opens up a new kind of opportunity: proactive content. Maybe someone asked about car maintenance months ago – now it’s time for an oil change, and the AI delivers a reminder, complete with a helpful snippet from your brand’s site. That moment didn’t come from search intent. It came from memory.
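Here’s a deliberately simple sketch of that kind of proactive trigger: the assistant keeps a snippet it saw earlier and surfaces it once a service interval has passed. The 180-day interval, the snippet fields, and the placeholder source URL are made-up assumptions for illustration, not how any particular assistant decides when to nudge.

```python
from dataclasses import dataclass
from datetime import date

# Hypothetical service intervals the assistant might know about, in days.
SERVICE_INTERVALS = {"oil change": 180}


@dataclass
class StoredSnippet:
    topic: str        # what the user originally asked about
    source: str       # page the assistant took the snippet from
    summary: str      # the part worth resurfacing later
    last_event: date  # when the related event last happened


def due_reminders(snippets: list[StoredSnippet], today: date) -> list[str]:
    """Return reminder messages for snippets whose interval has elapsed."""
    reminders = []
    for s in snippets:
        interval = SERVICE_INTERVALS.get(s.topic)
        if interval is not None and (today - s.last_event).days >= interval:
            reminders.append(
                f"Time for your {s.topic}? {s.summary} (source: {s.source})"
            )
    return reminders


snippet = StoredSnippet(
    topic="oil change",
    source="example.com/guides/oil-change",  # placeholder URL
    summary="Check the manual for the right oil grade before booking.",
    last_event=date(2025, 1, 10),
)

print(due_reminders([snippet], date(2025, 3, 1)))   # too early: empty list
print(due_reminders([snippet], date(2025, 7, 15)))  # 186 days later: one reminder
```

The trigger here is elapsed time rather than a query, which is the whole point: the brand’s snippet reappears because the context fits, not because anyone searched.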

In this model, relevance isn’t just about keywords – it’s about timing, context, and trust. If your content is worth remembering, the AI might bring it back into play. That’s a whole different game from the one we’ve been playing with today’s forgetful search engines.

Making AI Systems Talk: Why MCP Matters

As AI assistants start handling more complex tasks, they can’t operate in silos. They need to grab data from different places, use external tools, and even collaborate with other AI systems. That’s where the Model Context Protocol (MCP) comes in – an open standard that gives AI assistants one common way to connect to the data sources and tools they need.

Think of MCP as the middleware that lets AI tools sync up. It allows assistants to pull live context from other apps, databases, or content systems – and actually make use of it in real time. That’s a big deal for anything related to search or task execution. Instead of giving static, generic answers, an AI could tap into your company’s knowledge base, your project management tools, or even your internal dashboards to give a tailored response.

From Static Answers to Live Integration

The protocol itself was introduced by Anthropic in late 2024 to break through the typical walled-garden setup most AI systems are stuck in. Normally, integrating an AI with each of your tools takes its own custom development. MCP aims to standardize that process. With it, an assistant can securely plug into a range of tools and carry context across them, for example:

- Notion: to retrieve real-time documentation, meeting notes, or product specs.

- Jira: to check issue statuses, sprints, blockers, or deployment progress.

- Google Drive: to pull the latest spreadsheets, reports, or working drafts.

- Slack: to understand recent team discussions or unresolved questions.

- CRMs or analytics dashboards: to fetch customer data, usage metrics, or key KPIs.

Ask, “What’s the status of Project X?” and instead of guessing or giving a vague answer, your assistant could check your team’s Notion, Jira, or Google Drive and bring back the actual update – with context baked in.
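Under the hood, MCP messages are JSON-RPC 2.0, and invoking a tool uses the `tools/call` method with the tool’s `name` and its `arguments`. The sketch below builds such a request and parses the reply in plain Python, with a fake server standing in for a real Jira connection. The tool name `get_project_status` and the project key are hypothetical, and a real deployment would typically use an official MCP SDK over a transport such as stdio or HTTP rather than passing strings around.

```python
import json


def make_tool_call(request_id: int, tool: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request using MCP's tools/call method."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool, "arguments": arguments},
    })


# Stand-in for a hypothetical issue-tracker MCP server; a real one would query Jira.
FAKE_STATUS = {"PROJ-X": "3 open issues, 1 blocker, sprint ends Friday"}


def fake_server(raw_request: str) -> str:
    req = json.loads(raw_request)
    if req["method"] != "tools/call":
        raise ValueError("this toy server only handles tools/call")
    project = req["params"]["arguments"]["project"]
    text = FAKE_STATUS.get(project, "unknown project")
    # Tool results come back as a list of content items plus an isError flag.
    return json.dumps({
        "jsonrpc": "2.0",
        "id": req["id"],
        "result": {"content": [{"type": "text", "text": text}], "isError": False},
    })


def extract_text(raw_response: str) -> str:
    result = json.loads(raw_response)["result"]
    return " ".join(p["text"] for p in result["content"] if p["type"] == "text")


# "What's the status of Project X?"
request = make_tool_call(1, "get_project_status", {"project": "PROJ-X"})
print(extract_text(fake_server(request)))
```

Because every MCP server speaks this same envelope, the assistant side doesn’t change when you swap Jira for Notion or a CRM; only the tool names and arguments do.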

MCP isn’t about flash. It’s infrastructure – the kind that makes AI work smarter behind the scenes. And for anyone building search-ready content or assistant-enabled services, it’s a signal that the future isn’t just about visibility. It’s about being part of the ecosystem AI pulls from when it needs something reliable.

The Bigger Picture: Continuous Context and Open Ecosystems

What makes MCP particularly interesting isn’t just that it connects systems – it does so in both directions. The assistant doesn’t just grab data on demand; connected tools can also notify it when something they expose has changed. Combined with the assistant’s own memory, that gives the AI a live, constantly evolving context to work from.

Picture asking, “What should I do today?” Your AI could pull your meetings from Calendar, check the weather for your location, surface a breaking industry headline – and tie it all together in a single, personalized answer. That kind of synthesis becomes possible because MCP keeps the assistant plugged into everything – not just once, but continually.
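The “send updates back” part works through subscriptions: a client can ask to be told when a resource changes (`resources/subscribe`), and the server later pushes a `notifications/resources/updated` message carrying that resource’s URI. The sketch below shows a client-side cache reacting to that notification. The `calendar://today` URI and the in-memory calendar are hypothetical, and the re-read is simulated with a plain function instead of a real `resources/read` round trip.

```python
import itertools
import json
from typing import Callable


class ContextCache:
    """Toy client-side cache of MCP resources that refreshes on change notifications."""

    def __init__(self, read_resource: Callable[[str], str]) -> None:
        self.read_resource = read_resource  # stands in for a resources/read call
        self.cache: dict[str, str] = {}
        self.subscribed: set[str] = set()
        self._ids = itertools.count(1)

    def subscribe(self, uri: str) -> str:
        """Cache the resource now and return the resources/subscribe request to send."""
        self.subscribed.add(uri)
        self.cache[uri] = self.read_resource(uri)
        return json.dumps({
            "jsonrpc": "2.0",
            "id": next(self._ids),
            "method": "resources/subscribe",
            "params": {"uri": uri},
        })

    def handle_message(self, raw: str) -> None:
        msg = json.loads(raw)
        # Notifications carry no "id"; this one says a resource's contents changed.
        if msg.get("method") == "notifications/resources/updated":
            uri = msg["params"]["uri"]
            if uri in self.subscribed:
                self.cache[uri] = self.read_resource(uri)


# Hypothetical calendar data living on the server side.
calendar = {"calendar://today": "10:00 stand-up"}
client = ContextCache(lambda uri: calendar[uri])
client.subscribe("calendar://today")

# Later, a meeting is added and the server pushes a notification.
calendar["calendar://today"] = "10:00 stand-up, 14:00 client call"
client.handle_message(json.dumps({
    "jsonrpc": "2.0",
    "method": "notifications/resources/updated",
    "params": {"uri": "calendar://today"},
}))
print(client.cache["calendar://today"])
```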

This dynamic memory and tool access across systems completely changes the search experience. You’re no longer dealing with disconnected queries and static results. You’re tapping into an assistant that understands the bigger picture and adapts to it in real time.

There’s also a bigger play here – open standards like MCP stop us from ending up with dozens of locked-in, siloed AI ecosystems. It means your Google assistant could plug into your car’s systems or your company’s internal knowledge base through the same standard interface, without needing a custom integration for each one.

Down the line, we’re likely to see agents that can delegate across networks. Your personal AI might pass off a legal query to a specialized research agent, wait for the findings, and then wrap the results into a clean summary – without you ever needing to coordinate it manually. That’s where Google’s A2A (Agent-to-Agent) protocol comes in – we’ll get to that next – but the key distinction is this: MCP is about linking data and tools inside a single agent’s workflow. A2A is about agents collaborating across boundaries.

Used together, they unlock something much bigger: a world where AI agents don’t just answer questions – they orchestrate entire tasks behind the scenes, pulling from multiple tools and sources, all on your behalf.

AI Agents That Talk to Each Other: Google’s A2A Protocol

To make AI assistants smarter and more useful, Google is building the plumbing that lets them work together – not just with tools, but with each other. This new protocol, called A2A (Agent-to-Agent), rolled out in early 2025 as an open standard designed to let different AI agents collaborate across systems and providers.

Where MCP connects one assistant to data or tools, A2A links up entire agents – even if they’re built by different companies. It’s like bringing in a team of experts instead of relying on a single generalist. And it means complex tasks can be broken up, delegated, and executed in parallel, behind the scenes.

How A2A Works: From Delegation to Execution

Here’s what that looks like in practice: Say you tell your AI, “Plan my weekend trip and book everything I’ll need.” That’s not one task – it’s a chain. Your assistant could reach out to a travel agent AI to find and book flights and hotels. At the same time, it could ping a local restaurant agent to grab dinner reservations. Maybe even loop in a deals-focused agent to apply any discounts. Each of these agents works on its part, sends updates back, and your main assistant assembles the pieces into one clean itinerary.
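The fan-out-and-assemble pattern in that example can be sketched with Python’s `asyncio`. Each “agent” below is a hypothetical local coroutine with a fake delay; in a real A2A setup they would be remote services, and the orchestrator would also need to handle failures, retries, and partial results.

```python
import asyncio


# Hypothetical specialist agents. In a real A2A setup each would be a remote
# service reached over the network; here a short sleep simulates that latency.
async def travel_agent(destination: str) -> str:
    await asyncio.sleep(0.05)
    return f"Flights and hotel booked for {destination}"


async def restaurant_agent(destination: str) -> str:
    await asyncio.sleep(0.05)
    return f"Dinner reserved for Saturday in {destination}"


async def deals_agent(destination: str) -> str:
    await asyncio.sleep(0.05)
    return f"Checked {destination} bookings for applicable discounts"


async def plan_weekend(destination: str) -> list[str]:
    """Delegate subtasks concurrently, then assemble one itinerary."""
    # gather runs the coroutines concurrently and returns results in call order.
    results = await asyncio.gather(
        travel_agent(destination),
        restaurant_agent(destination),
        deals_agent(destination),
    )
    return list(results)


itinerary = asyncio.run(plan_weekend("Lisbon"))
for step in itinerary:
    print("-", step)
```

Running the subtasks concurrently means the whole plan takes roughly as long as the slowest agent, not the sum of all three.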

A2A makes all of that possible by creating a shared protocol. Agents advertise what they can do, send and receive structured “tasks,” and exchange context or results – called “artifacts” – in a way that makes collaboration frictionless.
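In A2A, an agent advertises itself through an Agent Card, a JSON document that describes it and lists its skills. The sketch below uses a simplified subset of those fields to route a subtask by tag, then tracks the task from submitted to completed with a text artifact attached. Field names are only loosely modeled on the published spec, the agents and URLs are hypothetical, and the real protocol defines more task states and richer message parts, so treat this as an illustration rather than a conforming client.

```python
from dataclasses import dataclass, field
from typing import Optional

# Simplified Agent Cards; real cards carry more fields (version, capabilities, I/O modes).
AGENT_CARDS = [
    {
        "name": "TripPlanner",
        "url": "https://travel.example/a2a",  # hypothetical endpoint
        "skills": [{"id": "book-travel", "name": "Book flights and hotels",
                    "tags": ["flights", "hotels"]}],
    },
    {
        "name": "TableFinder",
        "url": "https://dining.example/a2a",  # hypothetical endpoint
        "skills": [{"id": "reserve-table", "name": "Restaurant reservations",
                    "tags": ["restaurants"]}],
    },
]


def find_agent(tag: str, cards: list[dict]) -> Optional[str]:
    """Pick the first agent whose advertised skills include the tag."""
    for card in cards:
        for skill in card["skills"]:
            if tag in skill["tags"]:
                return card["name"]
    return None


@dataclass
class Task:
    task_id: str
    agent: str
    state: str = "submitted"  # then "working", then "completed" (or "failed")
    artifacts: list[dict] = field(default_factory=list)

    def start(self) -> None:
        self.state = "working"

    def complete(self, text: str) -> None:
        # Results travel back as artifacts made of one or more parts.
        self.artifacts.append({"name": "result", "parts": [{"text": text}]})
        self.state = "completed"


agent = find_agent("hotels", AGENT_CARDS)
if agent is None:
    raise LookupError("no agent advertises that skill")
task = Task(task_id="task-1", agent=agent)
task.start()
task.complete("Hotel booked for two nights")
print(task.agent, task.state, task.artifacts[0]["parts"][0]["text"])
```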

This isn’t just about smarter search. It’s about building AI ecosystems that can reason, divide labor, and deliver full solutions instead of isolated answers. And it’s coming fast.

Google’s push for A2A makes one thing clear: no single AI model is going to master everything. Instead of trying to build one mega-assistant that does it all, the future looks more like a network of specialized agents – each handling what it’s best at, and working together when needed.

A2A and the Future of Search & Content

For search, this is a game-changer. Complex queries – the ones that span multiple tasks – can now be split and delegated. Say you ask, “Help me buy a house, negotiate the price, and set up internet.” That’s three separate workflows. A2A lets your main assistant loop in a real estate agent AI, a legal contracts AI, and an internet setup AI – each doing their part, then passing results back for one cohesive response.

The protocol gives them a shared language and structure to work within – how to find each other, how to communicate updates, how to return information in compatible formats. It’s collaborative search, powered by automation.

And it’s not just theory. Google launched A2A with 50+ partners – software companies, startups, platforms – to help build it out. It’s now stewarded as an open project by the Linux Foundation, which means this isn’t a walled garden. It’s being built to scale.

Soon, we’ll see assistants that break down a question like “How can I grow my online presence?” by pulling insights from a content strategy agent, an SEO agent, and a web analytics agent – each with its own data, tools, and logic – then stitching the output into one clear answer. You won’t see the handoff. You’ll just get the insight.

The bigger picture? This breaks down platform silos. Your Apple AI could pass a request to a Google or OpenAI agent when it makes sense – and vice versa. Your car’s assistant might ask your home assistant to check something on your work computer. All that coordination happens through A2A.

If you’re in marketing or tech, here’s what that means: your content won’t just be consumed by people anymore. It’ll be consumed by agents – and those agents need clean APIs, structured data, and accessible endpoints. If your product or content isn’t A2A-ready (or MCP-ready), it may not even be in the running when the AI builds a response.

This is SEO evolving into something deeper. Less about ranking – more about being useful, accessible, and machine-readable at scale.

Multimodal Search Is Here – and Everyone’s Racing to Own It

Every major player is rethinking search through the lens of AI assistants – and the vision isn’t just about better answers. It’s about agents that act, understand context, and move across modalities.

Google’s taking the lead with a layered approach. Its Gemini model is powering everything from AI Mode to experimental projects like Astra and Mariner. Mariner is especially telling: it’s not just search anymore – it’s action. Instead of clicking around websites, users can now tell an assistant to buy tickets or handle a grocery order, and the agent takes care of the steps in the background. Google’s framing this as a fundamental shift: we’re not searching websites – we’re delegating tasks to agents.

Right now, Mariner is still in testing with early users through Google’s AI Ultra subscription, but it’s on track to roll into AI Mode soon. Astra, on the other hand, is injecting real-time intelligence into how Google understands the world – whether it’s answering a question about something in your camera view or enhancing live voice and video interactions. Between Gemini Live and the upcoming “Agent Mode” in Chrome, it’s clear Google sees search evolving into something immersive – where asking, browsing, and doing all blend into one flow, driven by AI.

OpenAI, Microsoft, and Persistent Agents

OpenAI, closely tied to Microsoft, is coming at the same problem from a slightly different angle – conversation first. ChatGPT’s Search mode combines live web browsing with natural dialogue and citations, turning the chat window into a fluid, interactive search layer. But it’s not stopping there.

With its Operator agent, first released as a research preview, OpenAI is building toward agents that don’t just find information – they handle full tasks. Think: submitting forms, making reservations, finalizing purchases – all done in the background. In 2025, it folded that work into an agent mode inside ChatGPT and released tools for developers to build their own agents: AI entities you can customize to act on your behalf. These agents can retain memory, reflect your voice or brand, access specific datasets, and run ongoing workflows – even when you’re not actively engaging with them.

What these persistent agents bring to the table:

- Always-on context: remembering past requests, preferences, and styles

- Automated workflows: tasks initiated without manual step-by-step instruction

- Tailored insights: delivering pointed suggestions rather than generic ones

Meta, Microsoft, Apple – and the Startup Wildcards

Meta isn’t sitting on the sidelines. In 2025, under Zuckerberg’s direction, the company launched Meta Superintelligence Labs – a high-stakes moonshot aimed at pushing artificial superintelligence forward. And they’re going after the industry’s best with serious firepower: billion-dollar infrastructure budgets, multi-million-dollar compensation offers, and a recruiting list that reads like an AI all-star draft.

Some of the names they’ve landed? Shengjia Zhao, one of the minds behind ChatGPT, now serving as chief scientist. Then there’s Matt Deitke – just 24 years old and reportedly brought in with a $250 million package. Zuckerberg, according to insiders, is keeping a literal list of top AI researchers he wants – and making offers big enough to shake the industry. It’s already working. OpenAI, for example, has responded with aggressive retention bonuses and equity bumps to hold onto key people.

But Meta’s not alone. Microsoft got into the game early through its OpenAI partnership, turning Bing into an AI-first search engine well before others caught on. Bing’s chatbot, with real-time answers and sourced citations, arguably pushed Google to roll out its Search Generative Experience (SGE). Microsoft’s also baking Copilot into Windows itself – embedding search right into the OS.

Apple, as usual, is keeping quiet, but leaks point to major moves: custom LLMs, possible AI search offerings, and a long-term play in embodied search via hardware like the Vision Pro headset.

And then there are the fast-moving startups. Perplexity and others are experimenting with AI-first discovery that skips the traditional web entirely in favor of direct answers and trusted summaries. Neeva was an early entrant in that space, though it shut down its consumer search engine and was acquired by Snowflake in 2023.

Search Isn’t a Feature Anymore – It’s the Interface

No one owns the future of search outright. But it’s clear what direction things are heading: search will be everywhere, integrated into everything, and powered by assistants. Whether it’s Google’s task agents, OpenAI’s conversational logic, Amazon’s voice-driven commerce, or Meta’s context-rich social graph – they all point to the same conclusion.

Search is no longer a static list of links. It’s becoming a live, contextual dialogue. And for creators, brands, and marketers, that changes the game. Getting “found” won’t be about ranking #1 – it’ll be about becoming the trusted answer AI reaches for. Or the tool it picks to complete a task.

The search box is evolving into something bigger – a system that doesn’t just find, but thinks and acts on your behalf. And the time to start building for that future? It’s already here.

FAQ

What is GEO, and how is it different from SEO?

GEO – Generative Engine Optimization – is about preparing content for AI-first discovery, not just search engines. With SEO, you’re optimizing for traditional web rankings. GEO is about making sure your brand, product, or insights show up in the answers AI assistants generate – whether that’s voice, chat, or embedded across devices. It’s not a replacement for SEO, but it’s definitely a shift in how visibility works.

Does GEO mean keywords no longer matter?

Not quite – but it does mean keywords aren’t the whole story anymore. AI doesn’t just scan for exact matches. It looks at meaning, intent, and context. So yes, keywords still matter, but they need to live inside content that actually answers questions, solves problems, and reflects how people naturally speak.

Can smaller brands compete in GEO?

Absolutely – and in some ways, GEO levels the playing field. AI assistants prioritize helpful, relevant content. If your answer is better, it gets surfaced – even if you’re not the biggest name in the space. That said, being visible still requires smart structuring, clear signals, and accessible data. It’s not magic.

How important is structured data for GEO?

Structured data matters more than ever. AI agents don’t just “read” like humans – they parse, interpret, and connect. Giving them clean, structured content – whether that’s through schema markup, APIs, or accessible formats – increases your chances of being selected, referenced, or acted on.
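As one example of schema markup, schema.org’s `FAQPage` type can describe a Q&A section in JSON-LD. The sketch below generates that markup from question-answer pairs; the pair shown is a placeholder, and markup on its own is no guarantee an assistant will cite the page. It simply makes the structure explicit to machines.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage markup wrapped in a JSON-LD script tag."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return ('<script type="application/ld+json">\n'
            + json.dumps(data, indent=2)
            + "\n</script>")


print(faq_jsonld([
    ("What is GEO?",
     "Generative Engine Optimization: preparing content for AI-first discovery."),
]))
```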