In the old SEO world, attribution was simple to trace. A person typed in a keyword, your site showed up, they clicked, and you could see the entire path in analytics. It was a clean, linear flow.

Generative AI search changes that. The typed query is only the spark. Behind the scenes, the system branches into subqueries, looks up entities, and ranks sources before stitching together the final answer.

What users see is the polished summary – usually a compact paragraph with citations. What stays invisible is the scaffolding: the query expansions, entity checks, and ranking layers working in the background. That’s the space where Generative Engine Optimization operates. And if you’re not mapping it, you’re essentially working blind.

How Fan-Out Shapes AI Search

In Google’s AI Overviews and AI Mode, what the user types is just the seed. The system quietly breaks that input into smaller parts: entities, intent, time ranges, and modifiers. Each of those parts can be expanded or swapped out to create new subqueries that go well beyond the original phrase.

Say someone searches for “best running shoes for marathons 2025.” On the surface, it’s a short request. Internally, the system might parse it like this:

- Entity: running shoes

- Attribute: marathon performance

- Timeframe: 2025 models

- Modifier: “best” → comparison or ranking intent
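To make the decomposition concrete, here is a toy Python sketch that splits the example query into the four slots above. Google has not published how it parses queries, so the keyword tables (`ATTRIBUTES`, `MODIFIERS`, `ENTITIES`) and the `decompose` function are hypothetical. A production system would rely on learned models rather than lookup tables.

```python
import re

# Hypothetical vocabularies; real systems use learned models, not fixed lists.
ATTRIBUTES = {"marathon": "marathon performance", "marathons": "marathon performance",
              "lightweight": "weight", "cushioning": "cushioning"}
MODIFIERS = {"best": "comparison/ranking", "top": "comparison/ranking", "vs": "comparison"}
ENTITIES = ["running shoes", "sneakers", "carbon plate"]

def decompose(query: str) -> dict:
    """Split a query into entity, attribute, timeframe, and modifier slots."""
    q = query.lower()
    tokens = re.findall(r"[a-z0-9]+", q)
    # First four-digit token starting with "20" is treated as the timeframe
    year = next((t for t in tokens if re.fullmatch(r"20\d{2}", t)), None)
    return {
        "entity": [e for e in ENTITIES if e in q],
        "attribute": sorted({ATTRIBUTES[t] for t in tokens if t in ATTRIBUTES}),
        "timeframe": f"{year} models" if year else None,
        "modifier": sorted({MODIFIERS[t] for t in tokens if t in MODIFIERS}),
    }

print(decompose("best running shoes for marathons 2025"))
# {'entity': ['running shoes'], 'attribute': ['marathon performance'],
#  'timeframe': '2025 models', 'modifier': ['comparison/ranking']}
```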

From there, the retrieval engine can spin off alternatives such as:

- “lightweight marathon shoes 2025 reviews”

- “Nike vs Adidas marathon shoes comparison”

- “running shoe cushioning for long-distance races”

- “top shoes for Boston Marathon training”

- “carbon plate running shoes 2025”

Some of these will hit the main search index. Others might draw on structured data from product catalogs, or on content from race forums and even YouTube reviews. Together, this branching process, known as fan-out, allows the AI to cover the original intent from multiple directions.
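The sketch below shows the expansion step in minimal form. It assumes the query has already been split into slots, then swaps attributes and pairs brands into head-to-head comparisons. The templates and the `fan_out` helper are illustrative assumptions, not Google's actual rules. They cover only two of the expansion types listed above.

```python
from itertools import combinations

def fan_out(entity: str, year: str, attributes: list[str], brands: list[str]) -> list[str]:
    """Expand one parsed query into illustrative subqueries using toy templates."""
    # Attribute swaps: keep entity and timeframe, vary the attribute
    subqueries = [f"{attr} {entity} {year} reviews" for attr in attributes]
    # Entity swaps: one comparison per unordered pair of brands
    subqueries += [f"{a} vs {b} {entity} comparison" for a, b in combinations(brands, 2)]
    return subqueries

for q in fan_out("marathon shoes", "2025", ["lightweight", "carbon plate"],
                 ["Nike", "Adidas", "Asics"]):
    print(q)
```

With two attributes and three brands, this yields two review queries and three comparison queries. A real fan-out would also vary intent, timeframe, and source type.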

GEO takeaway: Never treat the user’s typed query as the full picture. AI will generate variations, so your content should address both the explicit question and the hidden angles the system is likely to explore.

How We Help Brands Navigate Fan-Out at Nuoptima

At Nuoptima, we work in the same hidden layer of AI search where fan-out takes place. When search systems spin off subqueries and shuffle entities, we make sure your brand isn’t left out of the conversation.

We do this by combining technical SEO, content strategy, and link acquisition with a focus on what AI engines actually retrieve and cite. It’s not just about ranking for the obvious keywords – it’s about preparing your content for the synthetic queries and entity connections that drive real visibility.

What We Focus On:

- Mapping how queries branch into hidden subqueries

- Optimizing content for entity-based retrieval

- Building authority with high-quality backlinks

- Technical SEO improvements for performance and trust

- International and multilingual SEO strategies

Our approach is grounded in data. We map how queries expand, analyze which entities anchor retrieval, and create content that’s structured to surface across both explicit searches and AI-generated variations. Whether it’s technical SEO fixes, high-authority backlinks, or multilingual optimization, our goal is the same: to make sure your content shows up where AI systems are pulling answers.

We’ve seen this approach work across SaaS, eCommerce, healthcare, and beyond. For our clients, that has meant stronger fundraising outcomes, higher ROAS, and sustained growth in markets where traditional SEO signals alone aren’t enough.

When fan-out defines the game, we help you play it on your terms.

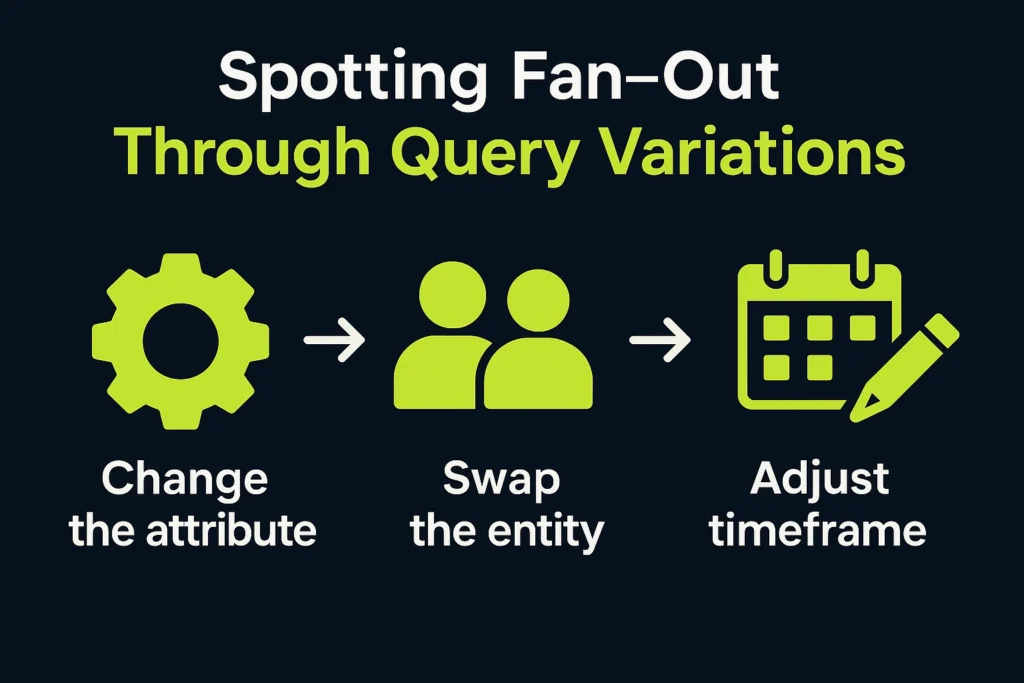

Spotting Fan-Out Through Query Variations

We can’t peek inside Google’s system to see the hidden subqueries it generates, but we can get close by testing how the output changes when we adjust the input. This process is often called query variation testing.

Start with a base search, for example, “best running shoes for marathons 2025.” Then, create small variations to see how results shift:

- Change the attribute → “lightweight marathon shoes 2025”

- Swap the entity → “Nike vs Adidas marathon shoes”

- Adjust the timeframe → “best running shoes for marathons 2024”

- Use synonyms → “top sneakers for marathon racing”

For each tweak, check whether an AI Overview appears and note which URLs are cited. Over time, you’ll notice that certain sites appear again and again across many different phrasings. Those recurring results likely point to shared hidden subqueries driving retrieval.
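Once you have logged the cited URLs for each variation, a few lines of Python can flag the recurring sources. This is a sketch. The `recurring_citations` helper, the 50% threshold, and the sample data (placeholder `example.com`-style URLs, not real results) are all assumptions for illustration.

```python
from collections import Counter

def recurring_citations(observations: dict[str, list[str]],
                        min_share: float = 0.5) -> dict[str, float]:
    """URLs cited in at least `min_share` of the variations that produced an AI Overview.

    `observations` maps each query variation to the URLs cited in its AI Overview;
    an empty list records that no AI Overview appeared for that variation.
    """
    with_overview = [set(urls) for urls in observations.values() if urls]
    if not with_overview:
        return {}
    counts = Counter(url for urls in with_overview for url in urls)
    n = len(with_overview)
    return {url: c / n for url, c in counts.most_common() if c / n >= min_share}

# Hypothetical log from four manual searches (placeholder URLs)
observations = {
    "best running shoes for marathons 2025": ["https://example.com/a", "https://example.org/b"],
    "lightweight marathon shoes 2025": ["https://example.com/a", "https://example.net/c"],
    "Nike vs Adidas marathon shoes": ["https://example.com/a"],
    "best running shoes for marathons 2024": [],
}
print(recurring_citations(observations))  # {'https://example.com/a': 1.0}
```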

By mapping overlaps across dozens of variations, you start to see clusters of intent: groups of pages that represent different branches of the fan-out process. You won’t capture every branch, since Google’s model is constantly adapting and freshness can reshuffle results, but even a partial map is highly valuable.

GEO takeaway: Query variation testing is your go-to method for uncovering hidden retrieval patterns. Pages that consistently show up across different versions of a query mark high-priority targets for your content strategy.

Rebuilding the Fan-Out Map

Once you’ve tested enough variations, you can move from spotting patterns to actually mapping them. Think of it as reverse-engineering how AI is branching out behind the scenes.

Let’s stay with our “best running shoes for marathons 2025” example. You’d start with that seed query and then spin out dozens of controlled variations: swapping brands, adding modifiers like “cushioning” or “lightweight,” or shifting time markers. Each time you run these searches, you log the AI Overview and record which URLs get cited.

The next step is looking at co-citations – how often two sources show up together across different variations. When you plot this data as a network graph, clusters start to appear. One cluster might focus on shoe technology and carbon plates, another on brand comparisons, and another on training advice for marathon runners.
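The co-citation step can be sketched in plain Python. The code counts how often each pair of URLs is cited together, keeps only pairs above a weight threshold, and treats the connected components of the resulting graph as clusters. Connected components is a deliberately simple stand-in for the community-detection algorithms (such as Louvain) that graph tools offer. The helper names, the threshold, and the placeholder URLs are hypothetical.

```python
from collections import Counter
from itertools import combinations

def co_citation_counts(citation_sets: list[list[str]]) -> Counter:
    """Count how often each pair of URLs is cited together in the same AI Overview."""
    pairs = Counter()
    for urls in citation_sets:
        for a, b in combinations(sorted(set(urls)), 2):
            pairs[(a, b)] += 1
    return pairs

def clusters(pairs: Counter, min_weight: int = 2) -> list[list[str]]:
    """Connected components of the co-citation graph, using edges with weight >= min_weight."""
    adjacency: dict[str, set[str]] = {}
    for (a, b), weight in pairs.items():
        if weight >= min_weight:
            adjacency.setdefault(a, set()).add(b)
            adjacency.setdefault(b, set()).add(a)
    seen: set[str] = set()
    groups = []
    for start in sorted(adjacency):
        if start in seen:
            continue
        stack, group = [start], set()
        while stack:  # iterative depth-first search
            node = stack.pop()
            if node not in group:
                group.add(node)
                stack.extend(adjacency[node] - group)
        seen |= group
        groups.append(sorted(group))
    return groups

# Each inner list holds the URLs cited in one AI Overview (placeholder URLs)
citation_sets = [
    ["plates.example/a", "plates.example/b"],
    ["plates.example/a", "plates.example/b", "brands.example/x"],
    ["brands.example/x", "brands.example/y"],
    ["brands.example/x", "brands.example/y"],
]
print(clusters(co_citation_counts(citation_sets)))
# [['brands.example/x', 'brands.example/y'], ['plates.example/a', 'plates.example/b']]
```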

These clusters are essentially the AI’s own way of grouping intent. For you, they reveal two important things:

- Which content themes consistently get retrieved for a given query set.

- Who your real competitors are in those citation “neighborhoods.”

GEO takeaway: Mapping co-citations gives you a clearer picture of the retrieval landscape. It shows both the themes AI systems trust and the competitive circles you’ll need to break into.

Getting Ahead of Fan-Out

Reverse engineering is useful, but it only shows you what the system is already doing. If you really want an edge, you have to look forward and predict how queries might expand before they actually do.

Here’s how that works. Take a seed query like “best running shoes for marathons 2025.” Instead of only testing variations by hand, start with related-keyword and keyword-clustering data from tools such as Ahrefs or Semrush. These show which searches tend to cluster together.

Then you push it further. Feed that seed into a language model and ask it to generate every angle a search engine might explore. The list you get back won’t just be the obvious ones like “Nike vs Adidas marathon shoes” – it will also include niche variations such as “marathon shoe cushioning for heavier runners” or “carbon plate sneakers for long-distance races.” These are exactly the kinds of synthetic subqueries AI systems invent to cover intent more fully.
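The prediction step can be sketched as follows. `complete` is a placeholder for whatever text-in, text-out client wraps your language model; no vendor API is assumed. The prompt wording is only a starting point. The parser strips list markers that models often add and drops duplicates as well as the seed itself.

```python
import re
from typing import Callable

PROMPT = (
    "You are simulating a search engine's query fan-out.\n"
    "Seed query: {seed}\n"
    "List {n} distinct subqueries a search system might issue to answer it, covering "
    "comparisons, product attributes, audiences, and time frames. "
    "Return one subquery per line."
)

# Leading bullets ("-", "*") or numbering ("1.", "2)") added by the model
_LIST_MARKER = re.compile(r"^\s*(?:[-*]|\d+[.)])\s*")

def predict_subqueries(seed: str, complete: Callable[[str], str], n: int = 20) -> list[str]:
    """Ask a language model for predicted subqueries and clean up its reply."""
    reply = complete(PROMPT.format(seed=seed, n=n))
    seen, out = {seed.lower()}, []
    for line in reply.splitlines():
        query = _LIST_MARKER.sub("", line).strip()
        if query and query.lower() not in seen:
            seen.add(query.lower())
            out.append(query)
    return out[:n]

def fake_model(prompt: str) -> str:
    # Stand-in reply so the sketch runs without any model or API key
    return ("1. Nike vs Adidas marathon shoes\n"
            "- marathon shoe cushioning for heavier runners\n"
            "\n"
            "carbon plate sneakers for long-distance races\n"
            "Nike vs Adidas marathon shoes\n")

print(predict_subqueries("best running shoes for marathons 2025", fake_model))
```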

Next, break those predictions down into entities (brands, materials, performance traits) and test them in live search. If your content surfaces in AI Overviews, you’re already aligned. If not, you’ve just found new opportunities to create or refine content.

GEO takeaway: Don’t wait for fan-out to reveal itself. By combining keyword data with AI-predicted expansions, you can get ahead of the curve and publish content that’s ready for the questions search engines haven’t asked yet.

Why Entities Matter More Than Keywords

In generative search, exact wording isn’t what holds the most weight – entities are. They’re the building blocks AI systems use to organize knowledge. That means two very different searches can actually connect to the same entities and end up pulling from the same retrieval branch.

Take running as an example. Someone might search for “best shoes for the Boston Marathon 2025,” while another person types “long-distance sneakers with carbon plates.” The words don’t match, but both link back to entities like [Marathon running], [Carbon plate shoes], and [Performance footwear]. If your content is connected to those entities, it’s eligible to appear for both queries, including synthetic ones the system generates on its own.
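Here is a toy sketch of that idea. It maps surface phrases to entity labels, then measures how much two queries share using Jaccard overlap (shared entities divided by all entities). The `ALIASES` table is hypothetical and relies on naive substring matching; a real setup would use an entity linker. With these simple rules, the first query never mentions carbon plates, so the overlap comes out partial rather than total.

```python
# Hypothetical alias table: surface phrase -> entity label (naive substring matching)
ALIASES = {
    "marathon": "Marathon running",
    "long-distance": "Marathon running",
    "carbon plate": "Carbon plate shoes",
    "shoes": "Performance footwear",
    "sneakers": "Performance footwear",
}

def entities(query: str) -> set[str]:
    """Map a query to the set of entity labels whose aliases it contains."""
    q = query.lower()
    return {label for phrase, label in ALIASES.items() if phrase in q}

def entity_overlap(q1: str, q2: str) -> float:
    """Jaccard overlap of the two queries' entity sets (0.0 to 1.0)."""
    a, b = entities(q1), entities(q2)
    union = a | b
    return len(a & b) / len(union) if union else 0.0

q1 = "best shoes for the Boston Marathon 2025"
q2 = "long-distance sneakers with carbon plates"
print(sorted(entities(q1) & entities(q2)))  # ['Marathon running', 'Performance footwear']
print(round(entity_overlap(q1, q2), 2))     # 0.67
```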

That’s why entity mapping is so important. It approximates what the AI actually understood, not just what the user typed.

GEO takeaway: Anchor your GEO strategy around entities rather than just keywords. Aligning content with the right entities expands your eligibility across a wide range of searches, even ones with little or no visible search volume.

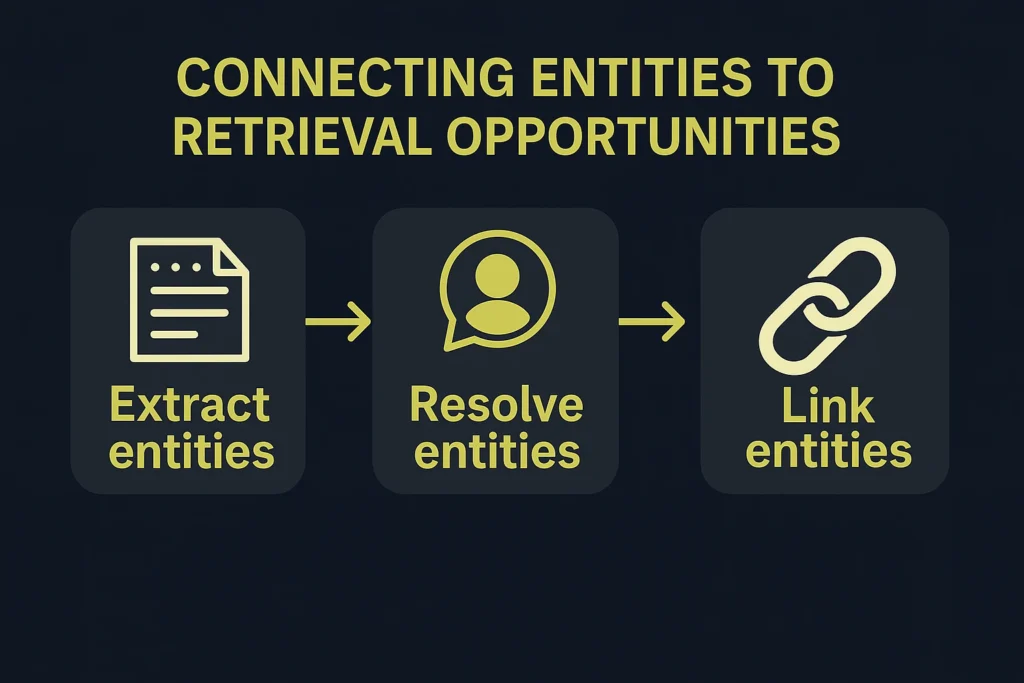

Connecting Entities to Retrieval Opportunities

Knowing that entities guide AI retrieval is one thing, but mapping them is what makes that knowledge actionable. The process usually involves three layers:

- Extract entities: Pull out the key entities from both the queries you care about and the content you’ve already published.

- Resolve entities: Match those surface forms to a canonical source, such as a Knowledge Graph ID, so you know exactly what concept the system is connecting.

- Link entities: Reinforce those connections inside your own content with schema markup, internal linking, and clear contextual mentions.
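The three layers can be sketched end to end in Python:

- `extract` finds known surface forms in a page.
- `resolve` maps each form to a canonical name and URL.
- `link` emits schema.org JSON-LD that lists the entities under the `mentions` property, each with a `sameAs` link.

The `CANONICAL` table uses placeholder `example.org` URLs. Before using anything like this, replace them with verified Wikidata or Knowledge Graph identifiers.

```python
import json

# Hypothetical surface form -> (canonical name, canonical URL).
# The example.org URLs are placeholders, not real entity IDs.
CANONICAL = {
    "marathon": ("Marathon running", "https://example.org/entity/marathon-running"),
    "carbon plate": ("Carbon plate technology", "https://example.org/entity/carbon-plate"),
    "nike": ("Nike", "https://example.org/entity/nike"),
    "cushioning": ("Cushioning systems", "https://example.org/entity/cushioning"),
}

def extract(text: str) -> list[str]:
    """Layer 1: find known surface forms in the text (naive substring match)."""
    t = text.lower()
    return [form for form in CANONICAL if form in t]

def resolve(forms: list[str]) -> list[tuple[str, str]]:
    """Layer 2: map surface forms to (canonical name, URL), deduplicated and sorted."""
    return sorted({CANONICAL[f] for f in forms})

def link(headline: str, resolved: list[tuple[str, str]]) -> str:
    """Layer 3: emit schema.org JSON-LD naming each entity via `mentions` and `sameAs`."""
    return json.dumps({
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "mentions": [{"@type": "Thing", "name": name, "sameAs": url}
                     for name, url in resolved],
    }, indent=2)

page = "Our 2025 marathon guide compares Nike carbon plate racers and their cushioning."
print(link("Best Marathon Shoes 2025", resolve(extract(page))))
```

Schema markup is only one of the three reinforcement channels listed above. Internal links and clear contextual mentions in the body copy still need to carry the same entities.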

Let’s go back to the query “best running shoes for marathons 2025.” Entity extraction might surface [Marathon running], [Carbon plate technology], [Nike], and [Cushioning systems]. When you place those entities onto your fan-out map, you’ll notice clusters – one branch might be tied to carbon plates, another to marathon-specific footwear, and another to brand comparisons.

From there, the question is simple: are your pages strongly connected to those entities, or do gaps remain? Without those ties, your content is less likely to appear in AI Overviews, no matter how well you’ve optimized keywords.

GEO takeaway: Use an entity–query map to zero in on the concepts that unlock retrieval eligibility. Strengthening those links gives your content a better shot at appearing where it counts.

Bringing Queries and Entities Together

Looking at queries on their own tells part of the story. Mapping entities gives you another view. But the real clarity comes when you put them together.

Imagine you’re tracking the query “best running shoes for marathons 2025” along with dozens of variations. Each time an AI Overview appears, you log three things:

- The triggering query (whether it’s the original or a variation)

- The entities that surfaced in that retrieval branch (like [Marathon running], [Carbon plate shoes], [Nike])

- The group or “cluster” that the citation seems to belong to
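Here is one way to structure that log, sketched as a Python dataclass. The three fields from the list above are kept as they are. `cited_urls` is an added assumption so you can measure where your own domain appears. `anchor_entities` and `visibility_by_cluster` are hypothetical helpers, and the sample records use placeholder domains.

```python
from collections import Counter
from dataclasses import dataclass, field
from urllib.parse import urlparse

@dataclass
class OverviewLog:
    query: str              # triggering query (original or variation)
    entities: list[str]     # entities surfaced in that retrieval branch
    cluster: str            # cluster the citations appear to belong to
    cited_urls: list[str] = field(default_factory=list)  # added field (assumption)

def anchor_entities(logs: list[OverviewLog], top: int = 3) -> list[tuple[str, int]]:
    """Entities that appear in the most logged AI Overviews."""
    return Counter(e for log in logs for e in set(log.entities)).most_common(top)

def visibility_by_cluster(logs: list[OverviewLog], domain: str) -> dict[str, float]:
    """Share of logged AI Overviews in each cluster that cite `domain`."""
    totals, hits = Counter(), Counter()
    for log in logs:
        totals[log.cluster] += 1
        if any(urlparse(url).netloc == domain for url in log.cited_urls):
            hits[log.cluster] += 1
    return {cluster: hits[cluster] / totals[cluster] for cluster in totals}

logs = [
    OverviewLog("best running shoes for marathons 2025", ["Marathon running", "Nike"],
                "brand comparisons", ["https://yourshop.example/guide"]),
    OverviewLog("carbon plate running shoes 2025", ["Carbon plate shoes", "Marathon running"],
                "shoe technology", ["https://other.example/plates"]),
    OverviewLog("Nike vs Adidas marathon shoes", ["Nike", "Marathon running"],
                "brand comparisons", []),
]
print(anchor_entities(logs))  # [('Marathon running', 3), ('Nike', 2), ('Carbon plate shoes', 1)]
print(visibility_by_cluster(logs, "yourshop.example"))
# {'brand comparisons': 0.5, 'shoe technology': 0.0}
```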

Over time, this combined dataset shows you what’s actually driving visibility. You’ll see which variations influence retrieval most, which entities consistently anchor results, and where your content either shows up or goes missing.

That integrated map becomes your control panel. Before publishing new content, you can check:

- Does it cover the high-value entities?

- Is it aligned with the subqueries that tend to generate citations?

- Have you added internal links that strengthen those entity ties?
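The three checks can be approximated with a small function. This sketch assumes plain string matching: an entity counts as covered if its name appears in the draft, and a subquery counts as covered if all of its words do. `hub_pages` is a hypothetical mapping from each entity to the internal page that anchors it.

```python
import re

def _words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9-]+", text.lower()))

def prepublish_check(draft: str, links: set[str], high_value: set[str],
                     subqueries: list[str], hub_pages: dict[str, str]) -> dict[str, list[str]]:
    """Flag gaps in a draft before publishing (naive string matching).

    links: internal URLs the draft already links to
    high_value: entity names that consistently anchor retrieval
    subqueries: variations that tend to generate citations
    hub_pages: hypothetical map of entity name -> your page that anchors it
    """
    text, words = draft.lower(), _words(draft)
    return {
        # 1. Does it cover the high-value entities?
        "missing_entities": sorted(e for e in high_value if e.lower() not in text),
        # 2. Is it aligned with the citation-generating subqueries?
        "uncovered_subqueries": [q for q in subqueries if not _words(q) <= words],
        # 3. Do internal links reinforce the entity ties?
        "missing_internal_links": sorted(
            e for e in high_value if e in hub_pages and hub_pages[e] not in links),
    }

report = prepublish_check(
    draft="Carbon plate racers dominate marathon running in 2025. "
          "We compare cushioning for heavier runners.",
    links={"/guides/marathon"},
    high_value={"Marathon running", "Carbon plate", "Nike"},
    subqueries=["cushioning for heavier runners", "Nike vs Adidas marathon shoes"],
    hub_pages={"Marathon running": "/guides/marathon",
               "Carbon plate": "/guides/carbon-plates"},
)
print(report)
```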

GEO takeaway: Merging query and entity tracking turns scattered data into a living map. It gives you a clear framework to guide new content decisions based on how AI systems actually retrieve and rank information.

Automating the Attribution Process

Manually testing queries is a great way to learn, but it quickly becomes unmanageable once you scale up. To keep pace with how AI systems evolve, you need automation.

Picture building an agent that handles the heavy lifting for you:

- Pulls your seed queries from a database (like “best running shoes for marathons 2025”).

- Generates controlled variations and predicted expansions.

- Runs them in search and captures any AI Overviews that appear.

- Extracts the cited URLs and the passages around them.

- Tags both the queries and the cited content with entities.

- Stores everything in a graph database so you can analyze it over time.
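A skeleton of that agent in Python follows. Every step that touches the outside world (`make_variations`, `fetch_overview`, `tag_entities`) is a placeholder callable you would supply; the sketch does not assume any particular search or SERP API. `EdgeLog` is an in-memory stand-in for a graph database. It stores edges stamped with a run ID so that runs can be compared later.

```python
from dataclasses import dataclass, field
from typing import Callable, Iterable, Optional

@dataclass
class Citation:
    url: str
    passage: str  # text surrounding the citation in the AI Overview

@dataclass
class EdgeLog:
    """In-memory stand-in for a graph database; each edge is stamped with its run."""
    edges: set = field(default_factory=set)

    def add(self, run_id: str, source: str, relation: str, target: str) -> None:
        self.edges.add((run_id, source, relation, target))

def run_once(
    seeds: Iterable[str],
    make_variations: Callable[[str], list[str]],
    fetch_overview: Callable[[str], Optional[list[Citation]]],
    tag_entities: Callable[[str], list[str]],
    store: EdgeLog,
    run_id: str,
) -> EdgeLog:
    """One pass of the pipeline; every callable is a placeholder you supply."""
    for seed in seeds:
        for query in [seed, *make_variations(seed)]:
            if query != seed:
                store.add(run_id, query, "variation_of", seed)
            citations = fetch_overview(query)  # None means no AI Overview appeared
            store.add(run_id, query, "overview", "absent" if citations is None else "present")
            if citations is None:
                continue
            for entity in tag_entities(query):
                store.add(run_id, query, "has_entity", entity)
            for c in citations:
                store.add(run_id, query, "cites", c.url)
                for entity in tag_entities(c.passage):
                    store.add(run_id, c.url, "has_entity", entity)
    return store

# Fakes so the sketch runs offline
def fake_variations(seed: str) -> list[str]:
    return [seed.replace("2025", "2024")]

def fake_fetch(query: str) -> Optional[list[Citation]]:
    # Pretend only the 2025 query triggered an AI Overview
    if "2025" in query:
        return [Citation("https://a.example/x", "Nike carbon plate racer")]
    return None

def fake_tag(text: str) -> list[str]:
    return [e for e in ("Nike", "carbon plate", "marathon") if e.lower() in text.lower()]

store = run_once(["best running shoes for marathons 2025"],
                 fake_variations, fake_fetch, fake_tag, EdgeLog(), run_id="2025-W01")
print(len(store.edges))  # 7
```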

Set this to run weekly, and you’ll see patterns shift from one week to the next. Maybe a new cluster emerges around “trail marathon shoes,” or a fresh entity like [Recycled materials] becomes more prominent. With automation, you can catch those changes within a week instead of months later.
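Detecting a rising entity such as [Recycled materials] can be as simple as comparing each entity's share of captured AI Overviews week over week. The sketch below assumes each weekly run yields one list of entities per overview. The `min_gain` threshold of 0.2 is an arbitrary assumption that you would need to tune.

```python
from collections import Counter

def entity_shares(overviews: list[list[str]]) -> dict[str, float]:
    """Fraction of captured AI Overviews in which each entity appears."""
    if not overviews:
        return {}
    counts = Counter(e for ents in overviews for e in set(ents))
    return {e: c / len(overviews) for e, c in counts.items()}

def rising_entities(last_week: list[list[str]], this_week: list[list[str]],
                    min_gain: float = 0.2) -> list[str]:
    """Entities whose share grew by at least `min_gain`; new entities start from 0."""
    before, after = entity_shares(last_week), entity_shares(this_week)
    gains = {e: share - before.get(e, 0.0) for e, share in after.items()}
    return sorted((e for e, g in gains.items() if g >= min_gain),
                  key=lambda e: (-gains[e], e))

# Hypothetical entity tags from two weekly runs (one inner list per AI Overview)
last_week = [["Marathon running", "Nike"], ["Marathon running", "Carbon plate shoes"]]
this_week = [["Marathon running", "Recycled materials"],
             ["Recycled materials", "Nike"], ["Marathon running"]]
print(rising_entities(last_week, this_week))  # ['Recycled materials']
```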

GEO takeaway: Automation transforms attribution from a one-off exercise into a living system. It helps you keep up with changing AI retrieval patterns and stay aligned with the entities that are gaining influence.

Looking Ahead: The Next Phase of Fan-Out and Entities

AI search isn’t standing still. Retrieval is moving beyond simple fan-out into multi-step reasoning, where one query triggers another, and the second depends on the first. In practice, that means the system might first identify an entity like [Marathon running], then branch into connected ones such as [Injury prevention], [Carbon plate shoes], or [Shoe durability]. These “bridge entities” link clusters together and often grow more influential over time.
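A rough way to spot candidate bridge entities in your own data is to find entities that appear in more than one cluster. This counting heuristic is a simplification; graph libraries also offer measures such as betweenness centrality that capture bridging more precisely. The cluster names and entity sets below are hypothetical.

```python
from collections import defaultdict

def bridge_entities(cluster_entities: dict[str, set[str]],
                    min_clusters: int = 2) -> dict[str, list[str]]:
    """Entities found in at least `min_clusters` clusters, mapped to those clusters."""
    membership: dict[str, set[str]] = defaultdict(set)
    for cluster, ents in cluster_entities.items():
        for entity in ents:
            membership[entity].add(cluster)
    return {entity: sorted(found_in) for entity, found_in in sorted(membership.items())
            if len(found_in) >= min_clusters}

# Hypothetical clusters from a running-shoe fan-out map
cluster_entities = {
    "shoe technology": {"Carbon plate shoes", "Shoe durability"},
    "injury and recovery": {"Injury prevention", "Carbon plate shoes"},
    "training": {"Injury prevention", "Marathon running"},
}
print(bridge_entities(cluster_entities))
```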

For our running shoes example, it’s not just about “best marathon shoes 2025.” Future fan-out may include steps like “marathon recovery tips” or “impact of cushioning on injury rates,” pulling in secondary entities that shape the retrieval path. To stay visible, your content will need to anticipate and cover those linked ideas too.

GEO takeaway: Start paying attention to the secondary and bridge entities in your space. They’re likely to become the connective tissue of tomorrow’s AI-driven retrieval.

Final Thoughts

Attribution in the generative era calls for a different mindset. Keywords still have value, but the real drivers of retrieval are the hidden query variations and the entities that anchor them. By experimenting with fan-out, mapping entities, and combining both into a single framework, you can build a clearer picture of how AI systems decide which content to cite.

For anyone competing in the “running shoes” market, or any other space, the approach is the same. You need to learn how to spot the shadows of fan-out through testing, strengthen your connections to the right entities, and rely on automation to stay current. Done consistently, this shifts you from reacting to AI systems to actively shaping how they retrieve and present your brand. That’s the essence of Generative Engine Optimization.

FAQ

What is query fan-out?

Query fan-out is the process where a single user query branches into multiple subqueries behind the scenes. For example, someone searching “best running shoes for marathons 2025” may trigger subqueries like “carbon plate sneakers for long-distance races” or “lightweight marathon shoe reviews.” The system uses these variations to build a more complete answer.

How can I find the hidden subqueries behind an AI Overview?

You can’t see them directly, but you can infer them by testing. Start with a base query and make small changes: swap a brand, change the year, or use synonyms. Then note which sources are consistently cited. Those recurring results point to hidden subqueries that are driving retrieval.

How do I connect my content to the right entities?

You’ll need to extract entities from your queries and your pages, resolve them to canonical IDs (like Knowledge Graph entries), and then link them with schema markup, internal links, and contextual mentions. This helps AI systems connect your content to the right entities.

Can this attribution process be automated?

Yes. You can build an automated system that pulls seed queries, generates variations, captures AI Overviews, extracts entities, and logs all of it in a database. Running this regularly helps you track shifts in retrieval patterns without doing everything manually.

What comes next for fan-out and entities?

Fan-out is evolving into multi-step reasoning. Instead of a flat list of subqueries, the system may chain queries together, where each one depends on the answer to the last. Bridge entities, the concepts that connect clusters together, are likely to become even more important in this type of retrieval.