Search isn’t just about blue links anymore. AI-driven platforms like Google’s AI Overviews, ChatGPT, and Perplexity are reshaping how people discover information. The real challenge now is making sure your content shows up in those answers.

This guide breaks down what AI systems look for, from technical accessibility to clarity of language, and how you can adapt. By the end, you’ll know the key steps to make your content easy for machines to interpret and strong enough to be reused in AI-generated results.

GEO Visibility Checklist – Technical Access plus Content Relevance

If crawlers cannot reach or understand your content, it will not appear in AI-generated answers. Generative engine optimization (GEO) starts here: AI systems depend on structured, machine-readable inputs to decide what to use and what to ignore.

Technical Must-Haves

- Clean HTML and clear hierarchy: Use proper h1, h2, h3, plus ordered and unordered lists to establish structure.

- Robots.txt that actually allows access: Do not block key sections for search or AI crawlers by accident.

- XML sitemaps for coverage: Help crawlers discover deep URLs and new pages quickly.

- HTML sitemap for users and internal links: Improves discovery and reinforces topical relationships.

- Skip experimental files that add no value: Industry ideas like llms.txt are not standard. Prioritize proven accessibility.
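A quick way to catch an accidental block is to test your robots.txt rules against the crawlers you care about. The sketch below uses Python's standard `urllib.robotparser`. The robots.txt content, the example.com URL, and the list of user agents are illustrative assumptions, so check each vendor's documentation for the crawler names it currently uses.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real check would load your live file instead.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

User-agent: GPTBot
Disallow: /
"""


def allowed_agents(robots_txt: str, url: str, agents: list[str]) -> dict[str, bool]:
    """Return, for each user agent, whether the rules let it fetch the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


# Example crawler names (assumptions; verify them before relying on this list).
report = allowed_agents(
    ROBOTS_TXT,
    "https://example.com/blog/geo-guide",
    ["Googlebot", "GPTBot", "PerplexityBot"],
)
for agent, ok in report.items():
    print(f"{agent}: {'allowed' if ok else 'BLOCKED'}")
```

In this sample file, the catch-all rule only hides /admin/, but the second group shuts one crawler out of the whole site. That is the kind of leftover rule worth looking for.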

Content Signals That Help Models Include You

- One topic per page: Keep focus tight to avoid mixed signals.

- Descriptive headings: Summarize what follows so the model assigns the right context.

- Answer-first formatting: Short paragraphs, bullets, and direct statements raise the odds of being quoted.

- Credible citations: Link to trusted sources, include expert input, and reference proprietary findings where possible.

Bottom line: Technical clarity plus topical relevance gives you the best shot at being selected for AI-generated answers.

Content That Actually Resonates

Technical fixes get you discovered, but they don’t guarantee you’ll be chosen. AI systems are built to surface content that not only reads clearly but also connects with people. With the sheer volume of material online, bland copy is easy to ignore.

A simple way to stress-test your approach is with the R.E.A.L. framework. It turns abstract advice into practical checkpoints:

- Resonant: Speak to the audience’s real needs, pain points, or aspirations so the content feels relevant.

- Experiential: Add elements that invite participation or interaction, from tools to visual walk-throughs.

- Actionable: Offer clear next steps, practical advice, or a framework readers can apply right away.

- Leveraged: Distribute content across multiple formats and platforms to get more life from the same idea.

The mix of accessibility and resonance is what makes content worth quoting. For GEO, that combination is your edge – it ensures both the machines and the humans see value.

Why Specifics Win

Generative engines prioritize content that feels concrete and verifiable. Broad claims or vague phrasing rarely make the cut, because models need information they can extract and cross-check. The more detail you provide, the stronger your chances of being cited.

That’s why it pays to be precise. Instead of saying “a large percentage of users improved,” it’s stronger to write “seven out of ten users saw faster results.” Rather than describing an event as happening “recently,” anchor it with an exact time marker such as “in September 2025.” When you present data, make it easy to lift – a short, standalone sentence is often more effective than tucking numbers inside a long block of text. And whenever you mention research or statistics, point to the original source so the information carries more weight.

Specifics turn your content into something that can be validated. That’s the kind of material generative platforms are built to highlight.

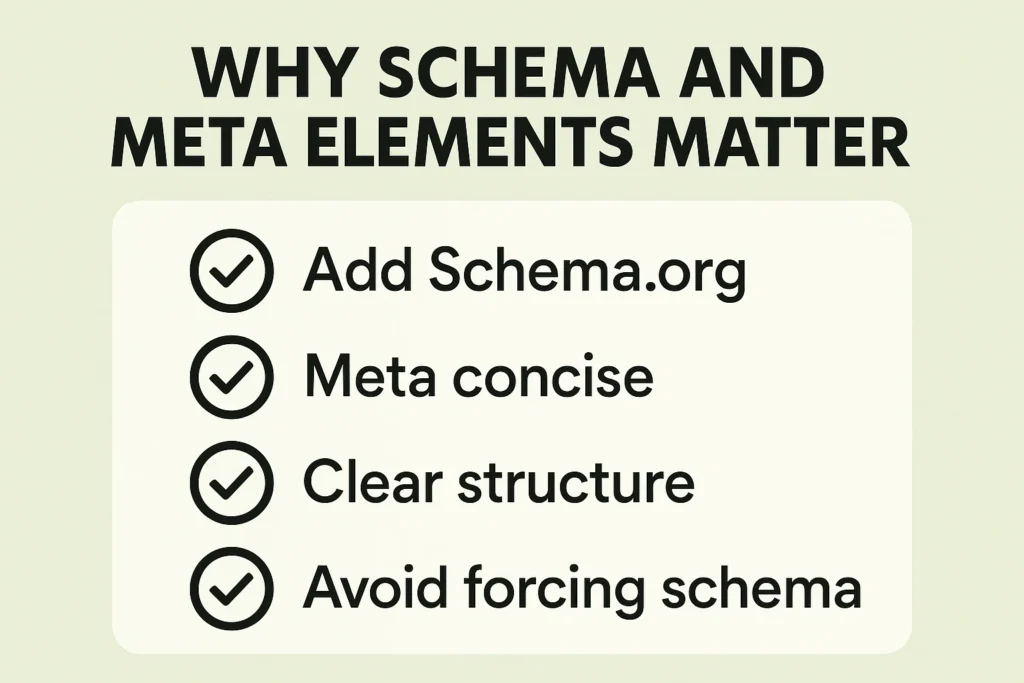

Why Schema and Meta Elements Matter

AI search doesn’t just scan for keywords. It depends on signals that explain what a page means, how its parts connect, and whether the content is trustworthy. Structured data and meta elements are the tools that provide this clarity, acting like signposts that machines can follow.

Schema markup is especially powerful. Labeling FAQs, reviews, or product details makes it far easier for AI systems to extract useful information. But the real value comes when you go beyond minimum compliance and fully define entities, attributes, and relationships. The more complete the markup, the better the context.
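To make this concrete, here is a minimal sketch that builds Schema.org FAQPage markup as JSON-LD. The `faq_jsonld` helper and the sample question are hypothetical, and a real page would usually describe more properties than this.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build a Schema.org FAQPage JSON-LD string from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


# Placeholder question and answer, for illustration only.
markup = faq_jsonld([
    ("What is structured data?", "Markup that labels what the parts of a page mean."),
])
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

The printed script tag is what would sit in the page's HTML. Generating it from the same data that renders the visible FAQ helps keep the markup and the on-page text in sync.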

Meta descriptions also carry weight. They may not influence rankings directly, but they shape how your page appears in search results and how AI models summarize your work. A tight, accurate description makes your content easier to interpret.

Headings are another overlooked piece of the puzzle. A clean hierarchy with h1, h2, and h3 tags gives both readers and machines a clear structure. Misused or irrelevant markup, on the other hand, often gets ignored.
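One simple check for a clean hierarchy is to look for skipped levels, such as an h2 followed directly by an h4. The sketch below uses Python's built-in `html.parser`. The `HeadingAudit` class and the sample HTML are hypothetical, and the rule it applies is one reasonable convention rather than a formal requirement.

```python
from html.parser import HTMLParser


class HeadingAudit(HTMLParser):
    """Collect heading levels (1-6) in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.levels: list[int] = []

    def handle_starttag(self, tag, attrs):
        # Matches h1..h6 only; tags such as <hr> or <html> are ignored.
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self.levels.append(int(tag[1]))


def heading_issues(html: str) -> list[str]:
    """Report places where a heading jumps down more than one level."""
    audit = HeadingAudit()
    audit.feed(html)
    issues = []
    for prev, cur in zip(audit.levels, audit.levels[1:]):
        if cur > prev + 1:
            issues.append(f"h{prev} is followed by h{cur} (skipped a level)")
    return issues


sample = "<h1>Guide</h1><h2>Setup</h2><h4>Details</h4>"
print(heading_issues(sample))
```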

Key Actions to Prioritize

- Add Schema.org markup for FAQs, How-To guides, products, or reviews where relevant.

- Keep meta descriptions concise and aligned with page content.

- Use a clear heading structure to separate and organize ideas.

- Avoid forcing schema onto elements that don’t need it.

Think of structured data as a roadmap. The clearer and more accurate it is, the greater the chance your content has of being chosen for AI-generated results.

The Power of User-Generated Content

AI systems are increasingly pulling from places that don’t look like traditional content hubs. Instead of polished brand blogs, we often see Reddit threads, Quora answers, or even YouTube comments surface in AI summaries. The reason is simple: these sources reflect how people actually use products, solve problems, and share advice in their own words. That authenticity is hard to fake, and generative engines treat it as a strong quality signal.

If your strategy ignores community-driven content, you may be leaving visibility on the table. For many search types, user insights are not just a supplement – they are the main event.

How to Make the Most of UGC in Practice

- Know the types of queries where it shines: AI tends to lean on community input for things like troubleshooting, side-by-side product comparisons, quick tips, and open-ended “what’s best” questions. These are moments where lived experience beats polished copy.

- Shape contributions on your own platforms: If you manage forums, reviews, or Q&A sections, guide contributors toward clearer, more detailed answers. Encourage full sentences, context-rich examples (“I tested this on an M1 MacBook with the latest OS” rather than “it didn’t work”), and logical formatting that breaks up long explanations.

- Support discovery with schema: Structured markup for reviews, Q&A, or discussion posts helps search engines recognize user input and makes it easier for AI systems to include those insights in generated answers.

- Prioritize usefulness over polish: Community content doesn’t need to sound like marketing material. What matters most is whether it helps someone solve a problem. AI models pick up on signals like whether the response gives a clear fix, whether others found it helpful, and whether it triggered engagement through replies or upvotes.

- Pay attention to what AI is surfacing: Keep track of how your audience’s conversations show up in places like AI Overviews or Perplexity summaries. Spotting which community insights get quoted can inform how you nurture and structure your own user-driven content.

In many cases, rough but useful answers outcompete perfectly styled content. That’s the shift AI search is driving, and it’s a reminder that building visibility isn’t only about what you publish, but also about the communities where your audience is already speaking.

How We Approach AI Search at NUOPTIMA

At NUOPTIMA, we’ve seen firsthand how fast the landscape is shifting. Traditional SEO tactics alone aren’t enough anymore: visibility now depends on how well your content is understood and reused by AI systems. That’s why our work focuses on bridging technical SEO with what we call Relevance Engineering: making sure content is structured, accessible, and strong enough to be pulled into AI-driven answers.

We help businesses move beyond chasing keywords. Our approach covers the foundations, from site architecture and schema to high-quality link acquisition, while also building content designed to resonate in AI summaries. For global companies, we handle international SEO, ensuring the right version of a page is surfaced in the right market. And for teams looking to scale, we combine data-driven insights with content strategies that perform across Google, ChatGPT, Perplexity, and beyond.

Here’s what we focus on:

- Search presence that converts: Making rankings translate directly into measurable revenue.

- Content built for AI discovery: Readable, verifiable, and structured so it gets quoted.

- Scalable strategies: From technical fixes to link-building campaigns that stand the test of time.

- Cross-market growth: Optimizing for multiple languages and regions without losing consistency.

Our goal is simple: to help you own your share of visibility in the new search ecosystem. Because if your brand isn’t being cited, quoted, or referenced in AI results, it’s missing from the conversations that matter most.

Writing So Machines Understand

Keyword density isn’t what drives visibility in AI search anymore. What matters is how clearly your content communicates meaning. Generative engines rely on embeddings, numerical vectors that capture relationships between words, phrases, and concepts. If your writing is vague, it’s harder for these systems to place your content correctly. If it’s precise and consistent, you make it easy for them to understand and reuse.

That starts with naming things directly. Instead of saying “the platform,” call it “Ahrefs” or “Semrush.” Rather than writing “this approach,” specify “the cluster content model” or “the topic hub strategy.” Use the same terminology throughout so the model doesn’t have to decide whether “customer acquisition cost” and “CAC” mean the same thing in your text. Small descriptors also help sharpen meaning. For example, “cloud-based CRM software” conveys far more than just “software.”

Avoid language that only makes sense in context. A phrase like “this worked better” is unhelpful when lifted out of its original paragraph. Writing “the new crawl budget method reduced indexation issues” keeps the subject clear and extractable.

In short, think of each sentence as something that could be read in isolation. The more self-contained and unambiguous your language, the more likely it is that AI systems will embed, interpret, and ultimately showcase your content.

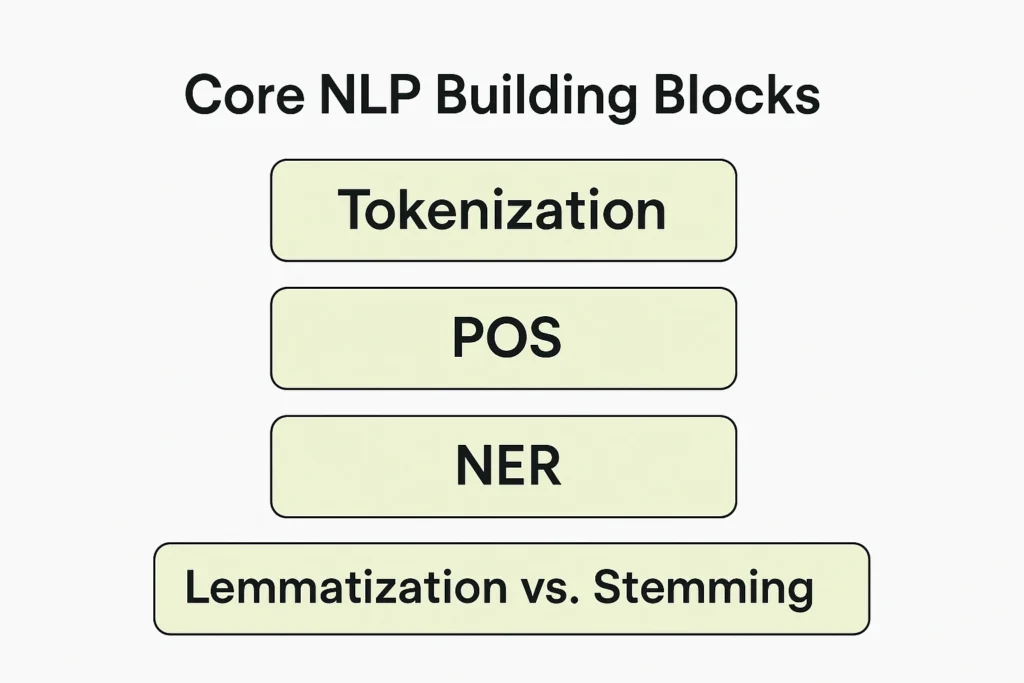

Core NLP Building Blocks

Tokenization

Tokenization is the first step in most natural language processing workflows. It’s about breaking text into smaller chunks, called tokens, that a system can work with. Depending on the task, tokens might be full words, pieces of words, or even single characters. By doing this, models can analyze text more effectively, calculate frequencies, or prepare inputs for training and prediction.

The same word also appears in data security, where tokenization means replacing sensitive values, such as card numbers, with stand-in tokens. That is a separate technique that happens to share the name, so don’t confuse the two.

Example: The sentence “AI tools are changing digital marketing.” could be split into tokens like [“AI”, “tools”, “are”, “changing”, “digital”, “marketing”, “.”].
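The split above can be reproduced with a few lines of Python. This is a minimal word-level sketch using a regular expression. Production language models typically use subword tokenizers instead, so treat the `tokenize` helper as an illustration rather than how any particular engine works.

```python
import re


def tokenize(text: str) -> list[str]:
    """Split text into word tokens and single punctuation tokens."""
    # \w+ grabs runs of letters/digits; [^\w\s] grabs one punctuation mark.
    return re.findall(r"\w+|[^\w\s]", text)


tokens = tokenize("AI tools are changing digital marketing.")
print(tokens)
# ['AI', 'tools', 'are', 'changing', 'digital', 'marketing', '.']
```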

Part-of-Speech Tagging (POS)

POS tagging assigns a grammatical role to every token in a sentence. It tells the system whether a word is a noun, verb, adjective, or another part of speech. This helps models understand sentence structure and improves tasks like parsing, entity recognition, and information extraction.

It’s especially useful when words can carry more than one meaning. Identifying the correct part of speech reduces ambiguity and gives search engines and AI systems a clearer picture of what’s being said.

Example: In “Creating tutorials improves audience engagement,” POS tagging would label “Creating” as a verb, “tutorials” as a noun, “improves” as a verb, “audience” as a noun, and “engagement” as a noun.

Named Entity Recognition (NER)

NER focuses on finding and classifying entities in text – things like company names, locations, people, or dates. This is a critical step for building knowledge graphs, categorizing content, and making sense of documents at scale.

NER is widely used across industries, from analyzing financial reports to pulling patient data in healthcare systems. It helps AI models understand which specific “things” are being talked about, not just the words themselves.

Example: In the sentence “Amazon launched a new data center in Mumbai,” NER would mark “Amazon” as an organization and “Mumbai” as a location.

Lemmatization vs. Stemming

Both lemmatization and stemming are ways of reducing words to a simpler form, but they do it differently. Stemming chops off endings to create a root, even if the result isn’t a real word. Lemmatization is more precise: it reduces words to their base dictionary form while considering their meaning.

Stemming is fast but blunt: a common stemmer turns “studies” into “studi,” which is not a word. Lemmatization returns “study” instead, which makes it more accurate for semantic tasks like SEO and AI search.

Example: From the phrase “analysts were predicting stronger results,” stemming might return [“analyst”, “were”, “predict”, “strong”, “result”], while lemmatization would give [“analyst”, “be”, “predict”, “strong”, “result”].
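The difference is easier to see side by side. The sketch below is a deliberately tiny toy: the suffix list and the lemma dictionary are hand-written assumptions for this example, not the rules of any real stemmer or lemmatizer. Real lemmatizers rely on full lexicons and part-of-speech information.

```python
SUFFIXES = ("ing", "es", "er", "s")  # toy rule list, checked in this order

# Tiny hand-written lemma dictionary, for illustration only.
LEMMAS = {
    "analysts": "analyst", "were": "be", "predicting": "predict",
    "stronger": "strong", "results": "result", "studies": "study",
}


def toy_stem(word: str) -> str:
    """Strip the first matching suffix, keeping at least three characters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def toy_lemmatize(word: str) -> str:
    """Look the word up in the lemma dictionary, falling back to the word itself."""
    return LEMMAS.get(word, word)


words = "analysts were predicting stronger results studies".split()
print([toy_stem(w) for w in words])
# ['analyst', 'were', 'predict', 'strong', 'result', 'studi']
print([toy_lemmatize(w) for w in words])
# ['analyst', 'be', 'predict', 'strong', 'result', 'study']
```

Two differences stand out. The stemmer leaves “were” alone because no suffix rule applies, while the lemmatizer maps it to “be.” The stemmer also produces “studi,” which is not a word.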

How AI Breaks Down and Interprets Language

Parsing Sentences Like a Map

Dependency parsing looks at how words connect to each other in a sentence. Instead of just knowing whether a word is a noun or a verb, the system builds a tree that shows which word is the “main” one and which words depend on it. This structure is key for machines to understand meaning at a deeper level.

For instance, in the sentence “The new algorithm boosts search performance,” parsing reveals that “algorithm” is the subject, “boosts” is the main verb, and “performance” is the object. Modifiers like “new” and “search” attach to their respective words, making the full relationship map clear.

Figuring Out Who’s Who in Text

Language often refers back to things without repeating them. Coreference resolution is the process of figuring out when different words or phrases point to the same entity. Without this step, a model might treat “she,” “the manager,” and “Anna” as three separate people when they’re actually one.

Take the example: “Anna presented the report. She explained the results in detail.” A system with coreference resolution can recognize that “Anna” and “She” both refer to the same person, keeping the meaning consistent.

Highlighting the Core Ideas

Keyword extraction helps machines identify the most important terms in a block of text. Instead of weighing every word equally, the system highlights the ones that define the main topic or purpose. This can be done with statistical methods like TF-IDF or graph-based approaches such as TextRank.

For example, in an article about “emerging trends in e-commerce,” a keyword extraction tool might highlight terms like “e-commerce,” “digital payments,” “customer experience,” and “supply chain.” This gives a snapshot of the core themes without reading the whole piece.
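To show how a statistical method like TF-IDF picks out key terms, here is a minimal from-scratch sketch. It uses one common variant of the formula (term frequency times the natural log of inverse document frequency). The three sample sentences are invented for the example, and the `tfidf_keywords` helper is hypothetical.

```python
import math
import re
from collections import Counter


def tfidf_keywords(docs: list[str], doc_index: int, top_n: int = 3) -> list[str]:
    """Rank the terms of one document by TF-IDF against a small corpus."""
    tokenized = [re.findall(r"[a-z]+(?:-[a-z]+)*", d.lower()) for d in docs]
    # Document frequency: in how many documents each term occurs.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    tokens = tokenized[doc_index]
    tf = Counter(tokens)
    scores = {
        term: (count / len(tokens)) * math.log(len(docs) / df[term])
        for term, count in tf.items()
    }
    # Highest score first; ties are broken alphabetically for stable output.
    return sorted(scores, key=lambda t: (-scores[t], t))[:top_n]


# Invented mini corpus, for illustration only.
docs = [
    "e-commerce payments grow as e-commerce checkout improves",
    "the supply chain improves as shipping costs fall",
    "the customer experience improves as support costs fall",
]
print(tfidf_keywords(docs, 0))
# ['e-commerce', 'checkout', 'grow']
```

Words that appear in every document, such as “as” and “improves,” score zero. Terms that are frequent in one document and rare elsewhere rise to the top.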

Clustering Words Into Bigger Themes

While keyword extraction focuses on individual words, topic modeling looks for broader themes by clustering words that frequently appear together. This helps group content into categories and uncover underlying subjects that might not be obvious at first glance.

For example, analyzing a batch of marketing blogs might reveal clusters such as “SEO strategies,” “social media campaigns,” and “conversion optimization.” Even if those exact phrases weren’t used, the model can still detect the themes from word patterns.

Understanding Tone: Sentiment Analysis

Sentiment analysis, sometimes called opinion mining, looks at the mood behind a piece of text. It sorts writing into categories like positive, negative, or neutral, helping machines grasp not just what is being said but how it is being expressed.

This is widely used in marketing and SEO to track customer sentiment in reviews, social media posts, or competitor content. For AI-driven search, sentiment can influence how results are ranked or even which snippets get highlighted in personalized feeds.

Example:

- “The interface is smooth and intuitive” → Positive

- “Support was slow and unhelpful” → Negative

- “The blog explains the topic” → Neutral
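The three labels above can be reproduced with a simple lexicon-based scorer. This is a rough sketch: the word lists are tiny assumptions made up for the example, and modern systems generally use trained models rather than word counting.

```python
import re

# Toy lexicons for illustration; real systems use large lexicons or trained models.
POSITIVE = {"smooth", "intuitive", "fast", "helpful"}
NEGATIVE = {"slow", "unhelpful", "confusing", "broken"}


def sentiment(text: str) -> str:
    """Label text by counting positive and negative lexicon words."""
    words = re.findall(r"[a-z]+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "Positive"
    if score < 0:
        return "Negative"
    return "Neutral"


for line in [
    "The interface is smooth and intuitive",
    "Support was slow and unhelpful",
    "The blog explains the topic",
]:
    print(f"{line} -> {sentiment(line)}")
```

A counter like this ignores negation, so “not helpful” would be scored as positive. That is one reason trained models are preferred in practice.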

Summarizing Long Content

Summarization condenses lengthy writing into something shorter and easier to digest. There are two common approaches. Extractive summarization lifts key sentences or phrases directly from the original text. Abstractive summarization, on the other hand, rewrites information in new words, often creating more natural and readable summaries.

This ability is critical for AI features like Google’s AI Overviews, article previews, or automatic meta descriptions. By shrinking content into usable chunks, summarization ensures that even long-form writing can show up in concise, AI-generated results.

Example: From an article on “the future of renewable energy,” an extractive summary might highlight a few direct sentences about solar and wind adoption, while an abstractive version could produce a short statement like: “Renewable energy growth is being led by advances in solar and wind technology.”
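Extractive summarization is the easier of the two approaches to sketch in code. The example below scores each sentence by how frequent its content words are across the whole text and keeps the top scorers. The stopword list, the sample text, and the `extractive_summary` helper are all assumptions for illustration.

```python
import re
from collections import Counter

# Small hand-picked stopword list, for illustration only.
STOPWORDS = {"the", "a", "an", "and", "of", "in", "is", "are", "to", "by", "for", "as"}


def content_words(sentence: str) -> list[str]:
    return [w for w in re.findall(r"[a-z]+", sentence.lower()) if w not in STOPWORDS]


def extractive_summary(text: str, n_sentences: int = 1) -> list[str]:
    """Return the n highest-scoring sentences, kept in their original order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(w for s in sentences for w in content_words(s))

    def score(sentence: str) -> float:
        words = content_words(sentence)
        # Average word frequency, so long sentences are not favored automatically.
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: -score(sentences[i]))
    return [sentences[i] for i in sorted(ranked[:n_sentences])]


text = (
    "Solar and wind adoption is growing. "
    "Solar costs fell as wind capacity rose. "
    "Some analysts like coffee."
)
print(extractive_summary(text))
# ['Solar and wind adoption is growing.']
```

Notice that the winning sentence is lifted word for word. An abstractive system would generate new wording instead, which requires a language model.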

Linking Names to Meaning

Entity linking goes beyond spotting names in text. It matches them to the correct identity in a knowledge base. That way, “Amazon” in a sentence about shopping is tied to the company, while “Amazon” in a geography article connects to the rainforest.

This process is essential for semantic search, which depends on knowing exactly which entity is being discussed. By linking words to their precise reference, AI systems can deliver more accurate and context-aware results.

Example:

- “The Tesla Model Y outsold other EVs in Europe” → “Tesla” is linked to the company.

- “She visited Tesla in Croatia” → “Tesla” is linked to Nikola Tesla, the person.
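One simple way to picture entity linking is as a comparison between the words around a mention and the words associated with each candidate entity. The sketch below applies that idea to the “Amazon” case described earlier in this section. The mini knowledge base and its context words are hypothetical, and real systems use far richer signals.

```python
import re
from typing import Optional

# Hypothetical mini knowledge base: candidate entities with typical context words.
KNOWLEDGE_BASE = {
    "Amazon": {
        "Amazon (company)": {"shopping", "order", "delivery", "prime", "retailer"},
        "Amazon (rainforest)": {"rainforest", "river", "brazil", "species", "forest"},
    },
}


def link_entity(mention: str, sentence: str) -> Optional[str]:
    """Pick the candidate whose context words overlap most with the sentence."""
    candidates = KNOWLEDGE_BASE.get(mention)
    if not candidates:
        return None
    context = set(re.findall(r"[a-z]+", sentence.lower()))
    # On a tie, max() keeps the first candidate listed in the knowledge base.
    return max(candidates, key=lambda name: len(candidates[name] & context))


print(link_entity("Amazon", "My Amazon order arrived with Prime delivery"))
print(link_entity("Amazon", "The Amazon river runs through the rainforest in Brazil"))
```

This is also why naming things precisely in your own copy helps: the surrounding words are what let a system decide which entity you mean.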

Classifying Text by Purpose

Text classification assigns categories or labels to chunks of writing. It’s the engine behind spam detection, topic grouping, and intent recognition. By doing this at scale, AI systems can filter, organize, and retrieve relevant information much more effectively.

In SEO and AI search, classification helps separate high-value content from low-quality text, understand the intent behind queries, and recommend better results.

Example:

- “Win a free iPhone now!” → Spam

- “Quarterly earnings show strong growth in healthcare” → Business/Finance

- “Best tips for faster website loading” → Technology/How-to

Word Embeddings: Mapping Meaning in Space

Word embeddings turn words into mathematical vectors that capture meaning and context. Instead of relying on exact matches, embeddings place words in a multi-dimensional space where related terms sit close together.

Modern systems, like Gemini embeddings, make this even more powerful by producing context-aware vectors across multiple languages. These representations support everything from similarity search to clustering and ranking.

Example:

The words “teacher,” “student,” and “school” would cluster near each other in vector space, while “volcano” would sit far away. With vector arithmetic, the model can even reason out analogies, such as “king – man + woman ≈ queen.”
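Here is a small sketch of both ideas, closeness in vector space and analogy arithmetic. The vectors are hand-made toys with five dimensions chosen to make the example work. Real embeddings are learned from data and have hundreds or thousands of dimensions, so treat the numbers as assumptions.

```python
import math

# Hand-made toy vectors; dimensions loosely mean
# (royalty, male, female, education, geology).
VECTORS = {
    "king":    [1.0, 1.0, 0.0, 0.0, 0.0],
    "queen":   [1.0, 0.0, 1.0, 0.0, 0.0],
    "man":     [0.0, 1.0, 0.0, 0.0, 0.0],
    "woman":   [0.0, 0.0, 1.0, 0.0, 0.0],
    "teacher": [0.0, 0.0, 0.0, 1.0, 0.0],
    "school":  [0.0, 0.0, 0.0, 0.9, 0.1],
    "volcano": [0.0, 0.0, 0.0, 0.1, 1.0],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means same direction, 0.0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def analogy(a: str, b: str, c: str) -> str:
    """Return the word closest to vector(a) - vector(b) + vector(c)."""
    target = [x - y + z for x, y, z in zip(VECTORS[a], VECTORS[b], VECTORS[c])]
    candidates = [w for w in VECTORS if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine(VECTORS[w], target))


print(round(cosine(VECTORS["teacher"], VECTORS["school"]), 2))   # 0.99
print(round(cosine(VECTORS["teacher"], VECTORS["volcano"]), 2))  # 0.1
print(analogy("king", "man", "woman"))                           # queen
```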

Document-Level Embeddings

While word embeddings handle single terms, document embeddings capture the meaning of entire sentences or paragraphs. This allows AI systems to compare long pieces of text and understand how closely related they are.

Techniques like Doc2Vec, Sentence-BERT, and the Universal Sentence Encoder provide these representations, giving search engines the ability to measure similarity across full articles.

Example: An article about “solar panel adoption in Asia” would sit close to one about “renewable energy investment in China” but far from a piece about “ancient Greek philosophy.”

Detecting Copying: Plagiarism Checks

Plagiarism detection uses embeddings to go beyond simple word matching. By analyzing semantic similarity, these systems can spot copied passages even if the wording has been slightly rephrased. This is especially important for content teams that need to protect originality and avoid search penalties.

Example:

If two blog posts phrase the same research findings differently, plagiarism tools can still flag them as near-duplicates based on meaning, not just identical wording.

Spotting the Unusual: Anomaly Detection

Anomaly detection is about finding patterns that don’t fit. In content and SEO, this can reveal sudden drops in readability, odd keyword usage, or reviews that look suspicious.

By highlighting these outliers, teams can catch quality issues before they hurt performance.

Example: If ten reviews of a product are neutral or positive but one suddenly uses extreme language, an anomaly detection system would flag it as worth checking.
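A basic version of this check is a z-score test: flag any value that sits far from the average, measured in standard deviations. The sketch below assumes each review has already been given a sentiment score between -1 and 1. The scores and the threshold of 2.0 are invented for the example.

```python
from statistics import mean, pstdev


def flag_outliers(scores: list[float], threshold: float = 2.0) -> list[int]:
    """Return indexes of scores more than `threshold` standard deviations from the mean."""
    mu = mean(scores)
    sigma = pstdev(scores)
    if sigma == 0:
        return []  # all scores identical, nothing stands out
    return [i for i, s in enumerate(scores) if abs(s - mu) / sigma > threshold]


# Hypothetical sentiment scores: ten ordinary reviews and one extreme one.
review_scores = [0.2, 0.3, 0.1, 0.0, 0.4, 0.2, 0.3, 0.1, 0.2, 0.3, -1.0]
print(flag_outliers(review_scores))
# [10]
```

A flag is a prompt to look closer, not a verdict. The extreme review may be spam, or it may be the one customer describing a real problem.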

Measuring Ease of Reading

Readability scoring estimates how simple or complex a text is for the average reader. Factors like word length, sentence structure, and syllable counts go into the calculation.

For SEO, readability affects user experience, bounce rates, and even how likely content is to be used in AI-generated overviews. Common formulas include Flesch-Kincaid, Gunning Fog, and SMOG.

Example:

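On the Flesch Reading Ease scale, where a higher score means easier text, a legal contract might score very low (hard to read), while a children’s storybook would score very high (easy to read). Grade-level formulas such as Flesch-Kincaid Grade Level, Gunning Fog, and SMOG run the other way: a higher number means harder text.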
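For a feel of how such a score is calculated, here is a sketch of the Flesch Reading Ease formula, where higher numbers mean easier text. The syllable counter is a rough heuristic and the two sample passages are invented, so the exact scores will differ from what dedicated tools report.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, discounting a trailing silent 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_reading_ease(text: str) -> float:
    """206.835 - 1.015 * (words per sentence) - 84.6 * (syllables per word)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    syllables = sum(count_syllables(w) for w in words)
    return 206.835 - 1.015 * (len(words) / len(sentences)) - 84.6 * (syllables / len(words))


# Invented sample passages, for illustration only.
simple = "The cat sat on the mat. The dog ran to the park."
dense = (
    "The indemnifying party shall compensate the counterparty for "
    "consequential liabilities notwithstanding any contrary provision."
)
print(round(flesch_reading_ease(simple), 1))
print(round(flesch_reading_ease(dense), 1))
```

Short sentences and short words push the score up. Long sentences packed with multi-syllable terms push it down, sometimes below zero.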

Semantic Search: Meaning Over Keywords

Semantic search goes beyond looking for exact word matches. Instead, it understands the intent and meaning behind a query, then finds documents that align with it. This approach is powered by embeddings and is the backbone of today’s AI-driven search platforms.

Example: If someone searches for “eco-friendly power options,” semantic search can return results about solar, wind, or renewable energy without needing the exact phrase “eco-friendly power options” in the document.

Wrapping It Up

AI search is rewriting the rules for visibility. Where traditional SEO focused on keywords and links, today’s systems demand structure, clarity, and credibility. Models pull from content that is specific, technically accessible, and easy to reuse, whether it’s a polished guide, a well-marked FAQ, or even an authentic forum discussion.

For businesses, the takeaway is straightforward: engineer your content for relevance. That means tightening technical foundations, writing with semantic precision, and giving AI the details it needs to verify and reuse your work. It also means recognizing that community voices and practical, real-world insights often matter just as much as brand messaging.

The brands that adapt to this shift won’t just show up more often in AI-generated answers – they’ll shape the conversations that customers actually see. And in an era where search is less about links and more about answers, that visibility is where influence begins.

FAQ

How is AI search different from traditional search?

AI search doesn’t just return links. It generates answers. Systems like Google’s AI Overviews, ChatGPT, and Perplexity build responses by pulling from multiple sources. That means your content needs to be written, structured, and marked up so machines can easily understand and reuse it.

What should I fix first to show up in AI-generated answers?

Start with accessibility. If crawlers can’t reach your content or parse it properly, it won’t show up. Clean HTML, working sitemaps, and open robots.txt rules are the foundation before you think about content strategy.

Do keywords still matter?

Not in the old sense. Keywords are still useful, but clarity, precision, and entity-based writing carry more weight. Instead of stuffing terms, use consistent language, name entities directly (e.g., “Google Analytics” instead of “this tool”), and keep passages self-contained.

Why do schema markup and meta elements matter?

Schema markup and meta signals help AI models understand what your page is about. Well-labeled FAQs, reviews, or products are easier to extract. The more context you give machines, the better chance your content has of being cited in AI summaries.

How long does it take for content to appear in AI results?

There’s no fixed timeline. If your site is crawlable and trusted, updates can appear within days. For new domains or lower-authority sites, it may take weeks or even months. Regular monitoring helps you see when content starts being cited.