Search isn’t as simple as matching words anymore. A single query can branch into a dozen different directions, pulling in planting calendars, tool lists, or soil tips if you’re asking about gardening – or just as many side angles in any other topic. Generative systems gather all of those fragments, weigh them, and weave them into a final answer.

For content creators, that means the old rules don’t apply. It’s not enough to target a keyword and hope for clicks. You have to think about intent clusters, formats, and whether your information can stand alone if pulled into an AI-generated response. This shift is reshaping what it takes to stay visible, and the details of query fan-out show exactly why.

How Queries Work in the Age of Generative Search

Back when search engines relied on strict ranking formulas, the query was the core of the whole process. You typed a phrase, and the engine tried to match it word for word against its index. Pages that used the same terms in important spots like headings or metadata usually floated to the top. The query itself was fixed – what you wrote was exactly what the system used to decide which results to show.

That design put keywords first and documents second. SEO strategies followed the same logic: choose a set of target phrases, build your content around them, and do everything possible to signal that your page was the best match.

Generative search has broken that pattern. Now, the words you type are more like a rough starting point. Instead of sticking to your original phrase, the system explodes it into many different versions. It spins off alternate wordings, fills in missing details, adds related questions, and sends each variant to different places to collect material. What comes back is not a ranked list but a mix of snippets and chunks that get re-scored, filtered, and stitched into a single response.

The implications are massive. Simply matching a user’s wording is no longer enough. The real contest happens beneath the surface, at the level of sub-queries and intent fragments. Your content has to be relevant not only to the literal phrase but also to the wider set of angles the system generates on its own.

Take the example of someone searching “best half marathon training plan for beginners.” In the old setup, the engine looked for pages that matched that phrase closely. In the generative setup, that single line branches into a web of directions, such as:

- Schedules for different training lengths

- Lists of recommended gear

- Advice on avoiding common injuries

- Nutrition tips during training

- Strategies for pacing a race

- Recovery guides for after the event

What the system wants isn’t one page that checks every box – it wants a collection of evidence from many angles. The original query is just the seed; the expansion is where the real action happens.

What We Do at Nuoptima to Keep You Ahead

At Nuoptima, we live and breathe the changes happening in search. Generative systems don’t just rank links – they expand queries, route them across formats, and piece together answers. That means brands can’t rely on old keyword playbooks anymore. To stay visible, content has to be structured, multi-format, and ready to be pulled into AI-driven results.

This is where we step in. We focus on building strategies that don’t just chase rankings but make your business part of the actual answers users see. Our approach blends data-driven insight with creative execution, so your content survives expansion, routing, and selection.

Here’s how we make that happen:

- Full-spectrum SEO and GEO: From technical fixes to strategic content hubs designed for generative search.

- Link building that works: High-quality, authoritative backlinks that improve trust and retrieval likelihood.

- Content built for extraction: Structured guides, tables, and multimedia formats that slot naturally into AI-generated answers.

- Local and international SEO: Strategies tailored for specific regions, languages, and markets.

- Paid and organic synergy: Aligning Google Ads, social campaigns, and SEO so growth comes from multiple channels.

Our goal is simple: to ensure that when search engines expand a query into dozens of branches, your brand shows up in more than one place. Because visibility in the generative era isn’t just about being found – it’s about being chosen.

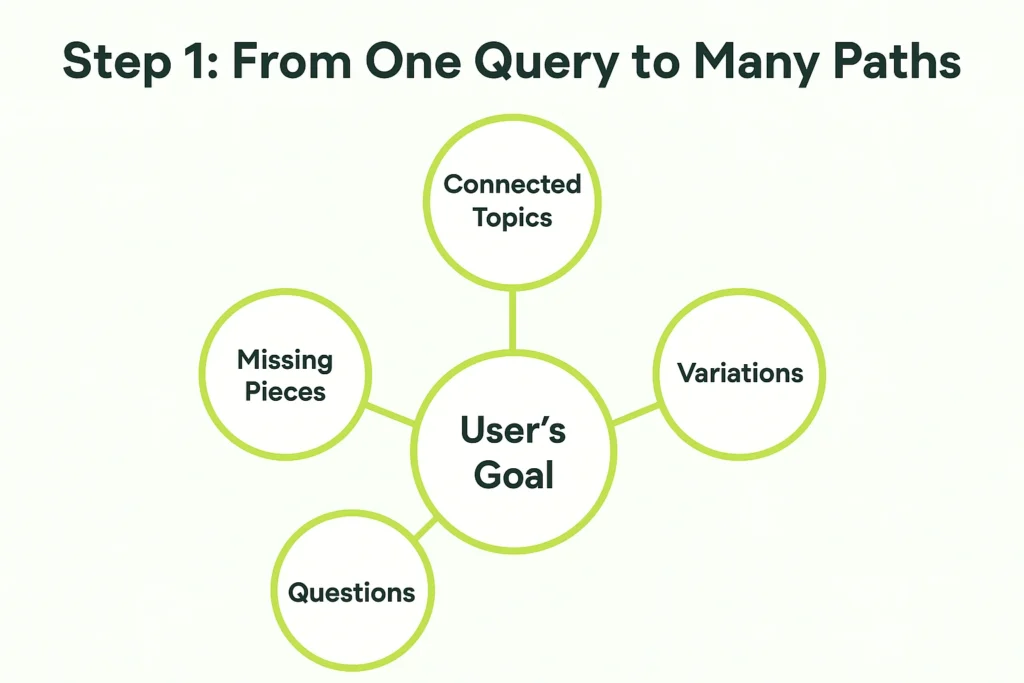

Step 1: From One Query to Many Paths

When a person types a query, modern search systems don’t just take those words at face value. Instead, they treat it as a rough signal – a starting point that hides multiple layers of meaning. Older search engines tried to broaden queries using tricks like synonyms or word stems, but today’s approach is much more advanced. Powered by large language models, embeddings, and behavioral patterns, the system now generates an expanded web of questions designed to capture both the obvious request and the hidden needs behind it.

Figuring Out the User’s Goal

Take the example of someone searching for “best half marathon training plan for beginners.” The system first categorizes the intent: it’s an informational query, squarely in the running and fitness world, with the task of finding a structured guide. The word “best” signals a comparative element, while the beginner focus introduces a safety angle – things like injury prevention and pacing advice. This early framing sets the guardrails for what types of sources will matter.

Spotting the Missing Pieces

After classifying, the system looks for “slots,” or variables that need to be filled to build a useful answer. Some are spelled out directly: “half marathon” defines distance, “beginners” defines audience. Others are implied, like the time available before race day, current fitness levels, or whether the runner’s goal is to simply finish or aim for a personal record. Even if not every slot is answered immediately, outlining them guides the search toward content that can provide those missing details.

Mapping Out Connected Topics

Once the system has sketched out the slots, it projects the query into a vector space to find nearby ideas. For instance, let’s say the original query is: “how to start a vegetable garden at home.” Instead of stopping there, the model surfaces related concepts such as:

- Beginner-friendly gardening tips

- Planting calendars organized by season

- Soil types and preparation methods

- Essential tools for small-scale gardening

- Watering and fertilization guides

- Common pest prevention strategies

These connections aren’t random. They emerge from patterns the system has observed across millions of searches and user behaviors. If people who type “how to start a vegetable garden” often click on soil guides or seasonal planting charts, those links are recorded. Knowledge graphs also help, connecting terms like “vegetable garden” to entities such as “composting,” “raised beds,” and “crop rotation.” The result is a map of adjacent topics the system can pursue to create a more complete answer.

Generating Variations

From there, the system rewrites the original query in new forms. It might get more specific, like “vegetable gardening for small balconies” or “beginner-friendly soil preparation for vegetable gardens.” It could also change the format to “step-by-step guide for planting vegetables at home.” These rewrites ensure that content phrased differently still has a chance to show up in retrieval.

Anticipating Follow-Up Questions

Finally, the model predicts what else the user is likely to ask. Someone starting a home garden may soon wonder, “Which vegetables grow fastest?” or “How much sunlight do tomato plants need?” By preloading these sub-queries, the system can pull material it might need for a fuller synthesized answer.

By the end of this stage, the single query has grown into a small ecosystem of related questions and needs. For content creators, the takeaway is simple: if you only write for the literal phrase, you’re covering just one branch of the tree. To be consistently included in generative answers, your content has to address the wider set of related topics that systems spin out behind the scenes.

Stage 2: Routing Sub-Queries and Building the Fan-Out Map

After the system has broadened the original query into a set of related questions, the challenge changes. At this point, it’s not just about figuring out what information should be gathered – it’s about deciding where that information lives and how to retrieve it. This stage is known as routing. Each sub-query is handled almost like its own mini assignment, and the system has to pick the right sources, the right format, and the most efficient retrieval method for each one.

Matching Questions to Sources

Take our gardening example. From the query “how to start a vegetable garden at home,” the system may have spun out sub-queries such as:

- “vegetable planting calendar by season”

- “tools needed for home gardening”

- “best soil for beginner gardeners”

- “watering schedule for vegetable gardens”

- “organic pest control for small gardens”

Each of these has different content requirements. A planting calendar is often best found in tables or charts from agricultural sites. Tool lists might come from gardening blogs, product pages, or retailer guides. Soil recommendations may be sourced from horticulture experts or local extension services. Watering schedules could come from structured knowledge bases, while pest control advice might need filtering for safety and reliability, drawing from university or government resources.

Thinking in Modalities

Routing also considers how the information should be delivered. A planting calendar works well as a table. A soil preparation guide could be a detailed article with diagrams. A tool checklist might be easier to understand as a bulleted list with pictures. A watering routine could be a short video with step-by-step instructions, but since video is harder to parse, the system may start by scanning transcripts or captions. The choice of modality matters because the system favors formats that are clear, structured, and easy to integrate into an answer.

Balancing Retrieval Strategies

Not all queries are retrieved in the same way. For precise details like “pH level for vegetable garden soil,” the system may rely on sparse retrieval methods that match rare technical terms exactly. For broader ideas like “beginner vegetable gardening tips,” semantic retrieval using embeddings can surface useful content even if the wording is very different. Often, a hybrid strategy combines both approaches, giving the system a mix of exact matches and semantically similar results.

Weighing Costs and Priorities

Every retrieval attempt: whether through a web crawl, database query, or API call – consumes resources. The system weighs how important each sub-query is to the final answer. High-priority questions like “seasonal planting calendar” might get pulled from multiple sources to ensure accuracy. Lower-priority ones may only get a single pass. This cost-conscious approach keeps the pipeline efficient while still ensuring quality where it matters most.

Why Routing Matters for Visibility

For content creators, routing is where visibility can be won or lost. If your material doesn’t align with the format or source type the system expects, it may never be retrieved. That’s why publishing in multiple modalities is critical. A guide on seasonal planting could exist as an article, a structured table, and even a downloadable PDF. A soil preparation tutorial could be text with diagrams, plus a short video with captions. The more formats you cover, the more chances your content has to be matched to a branch of the fan-out tree.

Stage 3: From a Pile of Results to a Usable Answer

By the time all the branching questions have been routed and retrieved, the system is holding a mountain of material – far too much to use directly. The next step is trimming that pile down to only the pieces that can realistically be worked into a coherent reply. This is the selection stage, and it’s where relevance meets practicality.

What Makes Something Extractable

The first thing the system looks for is whether a piece of content can be pulled out cleanly. A simple chart that shows which vegetables to plant in spring, summer, and fall is easy to reuse. A three-paragraph anecdote that mixes planting advice with a personal story is harder to slice into a usable chunk. Content that’s neatly scoped and structured usually makes the cut; messy text often doesn’t.

Packing Value Into Fewer Words

Next comes density. A short, direct line like “Carrots sprout best in loose, sandy soil at 6.0–6.5 pH, according to Oregon State Extension” offers more value than a long, meandering description that eventually mentions soil types. Information that delivers clear facts with minimal noise ranks higher because it gives the model strong building blocks to work with.

Being Clear About Conditions

Clarity of scope matters too. Advice like “Lettuce grows best when planted in cool weather, ideally in early spring or late summer” sets boundaries and makes it clear when the tip applies. A vague claim such as “Lettuce grows well almost anywhere” is more likely to be filtered out, since it could mislead someone in a hot or dry climate.

Trust and Cross-Checking

Authority plays a big role. A guide from a university agriculture program or a reputable gardening organization is weighed more heavily than a hobbyist’s casual blog. The system also looks for alignment – if several trusted sources all say beans should be planted after the last frost, that consistency makes the advice safer to include.

Staying Current

While some gardening basics don’t change much, other guidance does. For example, pest-control recommendations, climate-specific planting windows, or new resistant crop varieties evolve over time. Content that shows a recent update date or cites current research is more likely to survive the selection filter.

Removing Risky Material

Safety checks also play a part. Advice that suggests harmful pesticides without proper warnings, or tips that could damage soil or crops, may be excluded even if they appear detailed. These filters are in place to protect users from unreliable or dangerous instructions.

Why Some Content Misses Out

Not every good piece of writing makes it through. A beautiful infographic locked in a PDF may never be parsed if the data isn’t accessible. A long blog post that buries the key planting chart halfway down the page might get overlooked in favor of a simpler table elsewhere. The takeaway: it’s not just about writing helpful content – it’s about presenting it in a way the system can easily lift and reuse.

GEO Insight: Preparing Content for Selection

At this point, the key shift for GEO is clear: success depends on how well the pieces of your content hold up, not just the page as a whole. Think in terms of modules, not monoliths. Every segment of information should be able to stand on its own if pulled into an AI-generated answer. To make that possible, each chunk needs to:

- Define its boundaries – who it applies to, when it applies, and under what conditions.

- Pack real value into a small space, cutting out unnecessary fluff.

- Use layouts that machines can parse quickly, like bullet lists, tables, or short blocks of text with obvious labels.

- Carry weight by being written or checked by someone credible.

- Show freshness through dates, updates, or version notes.

For most creators, this means rethinking structure. Instead of writing one endless scroll, design content as a collection of small, clearly marked units. Each unit should be extractable without confusion and useful even if it’s lifted out of context. Adding schema, metadata, or alternate views, like a text version of a video or a CSV of a chart makes it even easier for retrieval systems to pick up.

When your content is built this way, it doesn’t just answer a question – it’s ready to be reused. That readiness is what determines whether your work ends up in the final AI response or stays buried in the pile.

Pulling It All Together: From Query to Answer

Let’s walk through the path a single query might take – in this case, “how to start a vegetable garden at home.”

- The system first expands the query. It tags it as an informational request, identifies variables like soil type, available space, and planting season, and then branches into related needs: calendars, tool lists, watering routines, and pest prevention. It also generates rewrites such as “gardening tips for small balconies” and anticipates follow-ups like “Which vegetables grow the fastest indoors?” By the end, one short query has become a cluster of 15–20 connected questions.

- Next comes routing. Each sub-query is matched to the sources and formats most likely to deliver strong material. Planting calendars might be sent toward agricultural databases, tool lists toward blogs or retailers, soil advice toward horticulture experts, and pest guidance toward trusted educational or government content. The format matters too: tables, lists, and transcripts are often prioritized because they’re easy to parse and reuse.

- After retrieval, the system moves into selection. Here the goal is to filter the pile down to only the most usable chunks. A seasonal planting chart with clear rows and columns is kept. A concise fact about soil pH with a university citation is kept. But a rambling blog story that hides the advice halfway through is dropped, and unsafe pesticide suggestions are discarded outright. What remains is a smaller, higher-quality set of information pieces.

- Finally, the synthesis layer assembles the answer. The user might see an opening paragraph on the basics of home gardening, a table showing planting seasons, a short checklist of tools, a section on watering routines, and a few notes on pest control. Each of those components comes from a different branch of the fan-out, yet together they form a cohesive and practical guide.

The important point here is that every stage: expansion, routing, selection, and synthesis, presents both chances to be included and risks of being left out. A single generic guide might win one slot, but a well-structured set of planting charts, tool checklists, soil prep tips, and watering guides could appear in several parts of the final AI-generated answer.

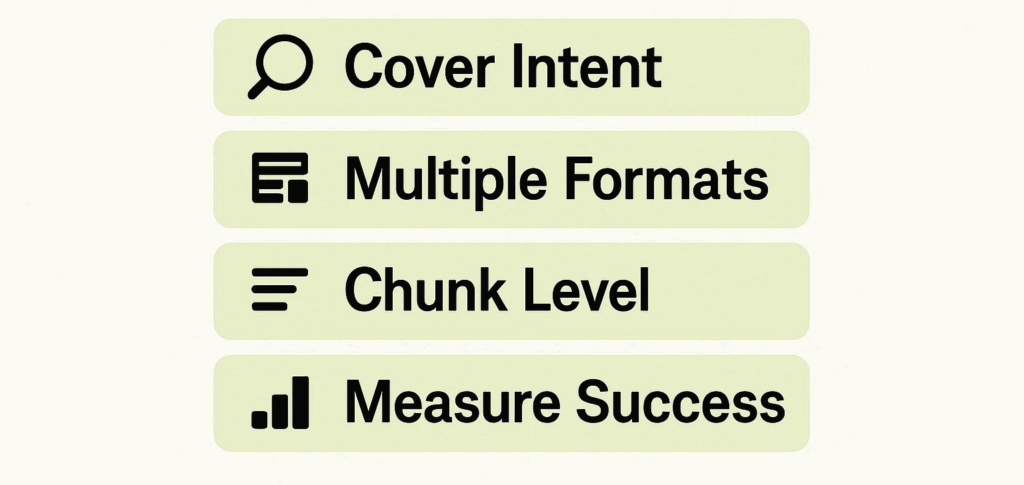

Practical Lessons for GEO in a Generative Era

Generative search isn’t a straight ranking battle for one phrase anymore. It’s a multi-stage process, and your content needs to survive at every step. Here’s what that means in practice:

1. Cover Intent, Not Just Keywords

Focusing on a single phrase like “how to start a vegetable garden at home” won’t cut it. The system is going to expand that into planting calendars, soil advice, watering tips, and pest control. If your content only answers the initial query, you risk being visible in just one branch instead of many. To stay competitive, your material has to cover the broader ecosystem of related intents.

2. Embrace Multiple Formats

Routing decisions are shaped by modality. A planting calendar isn’t always pulled as a paragraph — sometimes the system prefers a table, other times a PDF or even a short video. Likewise, a soil preparation guide can be useful as an article, but it’s even more valuable if it also exists as an infographic or structured dataset. Having your information in several forms multiplies your chances of being retrieved.

3. Optimize at the Chunk Level

Selection happens at the unit level, not the page level. A table that clearly shows seasonal planting times can be lifted directly into an answer, while a long narrative that buries the same information may never be used. Every section of your content should be designed to stand alone — scoped clearly, easy to extract, and packed with useful detail.

4. Rethink How You Measure Success

Old SEO metrics like rankings or CTR don’t reflect performance in generative systems. Instead, the real signals are: how many branches of the fan-out your content appears in, what percentage of your chunks are extractable, and how often your material gets selected in synthesis. These are the markers that show whether your work is actually surfacing in AI-generated results.

Wrapping It Up

The way search works today is no longer about one query leading to one list of results. It’s a layered process where a simple phrase like “how to start a vegetable garden at home” gets expanded into dozens of related questions, routed across different sources, filtered for usability, and finally stitched together into a single answer.

For anyone creating content, the message is clear: it’s not enough to just cover the basics. You need to think about intent clusters, offer your material in formats that machines can easily reuse, and make every section of your content strong enough to stand alone. The pages that win are the ones that anticipate expansion, survive selection, and are ready to be pulled directly into synthesis.

In short, success in generative search isn’t about chasing keywords, it’s about building content that can play a role in multiple parts of the answer. Just like a thriving garden needs variety, structure, and good upkeep, your content needs to be diverse, well-organized, and regularly refreshed if you want it to keep showing up where it matters.

FAQ

It’s the process where a search engine takes a single user query and expands it into many related questions. Instead of only looking for exact keyword matches, the system generates variations, follow-ups, and related topics, then pulls content from multiple sources to build a more complete answer.

Old-school SEO was keyword-first: you targeted specific terms, optimized for them, and hoped to rank high. Generative search flips that – it cares less about exact matches and more about whether your content can answer the wider cluster of intents the system spins out.

Because AI-driven systems need to extract usable chunks. A clean table of planting seasons, for example, is easier to lift into a response than a long paragraph that hides the same information. Lists, short sections, tables, and transcripts tend to survive the selection filters better.

It means you need to think beyond one page and one keyword. Break content into smaller modules, anticipate the questions users might not ask directly, and publish in multiple formats: text, tables, visuals, and video with transcripts. The more flexible your content, the more likely it gets included in answers.

Not exactly. Keywords still matter as signals, but they’re no longer the sole deciding factor. Think of them as the seed, the real visibility comes from how well your content matches the expanded tree of queries the system generates around that seed.