Relevance Engineering is about designing content for two audiences at once: people who want answers and AI systems that decide which answers surface. Mastering this balance requires moving past old habits, understanding how semantic models interpret text, and building pages that can stand on their own in an AI-generated response.

This article looks at what that shift means in practice. We’ll explore the elements of semantic scoring, how passage optimization works, why embeddings are central to modern content strategy, and the practical steps you can take to make your material easier for AI to find, retrieve, and present. By the end, you’ll see that Relevance Engineering isn’t an abstract theory – it’s a toolkit for making sure your content remains visible and competitive in a search landscape driven by machines.

Moving Beyond Keywords: Semantic Scoring and Passage Structure

For years, SEO revolved around a fairly simple playbook: pick a keyword, repeat it in the right spots, and watch rankings climb. That approach worked when search engines relied on keyword density as a proxy for relevance. But today’s systems operate on a different level. Language models like BERT and GPT don’t just scan for repeated terms; they analyze context, relationships between words, and the overall meaning of a passage.

This evolution changes how we think about optimization. Instead of asking “How many times should I use the keyword?” the question becomes “Does this content demonstrate a full and accurate understanding of the topic?” Search engines are getting better at detecting whether a piece of writing genuinely answers a question, covers related concepts, and provides depth.

Understanding Semantic Scoring

Semantic scoring measures how closely a piece of content aligns with the intent behind a search query. Rather than rewarding surface-level keyword matches, it assigns value to how well the content’s concepts and phrasing reflect the subject as a whole.

Think about a page on car engine repair. Old SEO logic would push you to repeat “engine repair” or “fix an engine” as many times as possible. Modern semantic models, however, look for a wider vocabulary that reflects expertise: “faulty spark plugs,” “oil leaks,” “misfires,” or “engine repair costs.” These related phrases show the content isn’t just repeating a target term but actually engaging with the subject in depth. That variety increases the semantic score and makes the content more trustworthy to both users and machines.
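To make the contrast concrete, here is a minimal sketch that compares old-style keyword density with a crude "concept coverage" check. It is only an illustration: real semantic scoring relies on language models, not substring matching, and the function names and the `RELATED` vocabulary are assumptions made up for this example.

```python
import re

def keyword_density(text: str, keyword: str) -> float:
    """Share of the words in `text` taken up by exact repeats of `keyword`."""
    words = re.findall(r"[a-z']+", text.lower())
    hits = text.lower().count(keyword.lower())
    return hits * len(keyword.split()) / max(len(words), 1)

def concept_coverage(text: str, related_terms: list[str]) -> float:
    """Fraction of the related terms that appear at least once in `text`."""
    lowered = text.lower()
    found = [term for term in related_terms if term.lower() in lowered]
    return len(found) / len(related_terms)

# Hypothetical vocabulary for the engine-repair topic (an assumption, not a standard list).
RELATED = ["spark plug", "oil leak", "misfire", "repair cost"]

stuffed = "Engine repair tips: engine repair is easy. Call us for engine repair."
rich = ("A misfire often points to a faulty spark plug, while an oil leak "
        "can raise the engine repair cost if it is ignored.")

for label, text in [("stuffed", stuffed), ("rich", rich)]:
    density = keyword_density(text, "engine repair")
    coverage = concept_coverage(text, RELATED)
    print(f"{label}: density={density:.2f}, coverage={coverage:.2f}")
```

The stuffed text wins on density but covers none of the related concepts, while the richer text mentions the keyword once and covers all of them.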

The Role of Passage Optimization

Semantic scoring on its own isn’t enough. To work well in AI-driven systems, content also needs to be structured for easy extraction, and that’s where passage optimization comes in.

When a user submits a query, AI search systems typically don’t pull in entire pages. Instead, they retrieve the specific chunks of text that most directly answer the question and pass those to the language model. That means each section of your content should be able to stand alone as a clear, concise unit of meaning. The cleaner and more direct your passages are, the more likely they are to be retrieved.

Take a recipe site as an example. A page that simply lists ingredients in the middle of a long paragraph makes it harder for AI to identify and surface that information. But if the page has distinct sections titled “Ingredients,” “Step-by-step instructions,” and “Cooking time,” each becomes a ready-made passage for retrieval. AI systems can lift those snippets out and serve them as authoritative answers.
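As a rough sketch of why headings help, the snippet below splits a page into passages at each heading. It assumes the page uses Markdown-style `##` headings, which is an assumption for this example; real retrieval pipelines chunk content in many different ways, and `split_into_passages` is a hypothetical helper, not part of any library.

```python
import re

def split_into_passages(page: str) -> dict[str, str]:
    """Split a Markdown-style page into {heading: body} passages."""
    passages: dict[str, str] = {}
    heading = "Introduction"  # label for any text before the first heading
    lines: list[str] = []
    for line in page.splitlines():
        match = re.match(r"^#{1,6}\s+(.*)$", line)
        if match:
            # A new heading closes the passage collected so far.
            body = "\n".join(lines).strip()
            if body:
                passages[heading] = body
            heading, lines = match.group(1).strip(), []
        else:
            lines.append(line)
    body = "\n".join(lines).strip()
    if body:
        passages[heading] = body
    return passages

# Hypothetical recipe page used only for illustration.
page = """\
## Ingredients
2 eggs, 100 g flour, 250 ml milk

## Step-by-step instructions
Whisk everything together, then fry in a hot pan.

## Cooking time
About 15 minutes.
"""

for title, body in split_into_passages(page).items():
    print(f"{title}: {body}")
```

Each heading yields one self-contained passage, which is the kind of unit a retrieval system can lift out on its own.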

The combination of semantic scoring and passage optimization creates content that not only ranks well but is also usable by AI in real-world search scenarios. It’s a shift away from keyword hacks and toward building content that is both comprehensive and structurally clear.

From Words to Vectors: How Content Becomes Machine-Readable

At its core, Relevance Engineering is about shaping content so that machines can understand and reuse it. This is where embeddings come into play. An embedding is a numerical representation of words, phrases, or entire documents in a vector space – essentially a way for AI systems to measure how closely different concepts relate to each other.
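One common way to measure that closeness is cosine similarity between two vectors. The sketch below uses tiny hand-made vectors purely for illustration; real embeddings come from a trained model and have hundreds or thousands of dimensions, and the numbers in `toy_embeddings` are invented.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: near 1.0 means very similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Invented 3-dimensional "embeddings" (assumption: not produced by any real model).
toy_embeddings = {
    "spark plug": [0.9, 0.1, 0.0],
    "engine misfire": [0.8, 0.2, 0.1],
    "pancake recipe": [0.0, 0.1, 0.9],
}

related = cosine_similarity(toy_embeddings["spark plug"], toy_embeddings["engine misfire"])
unrelated = cosine_similarity(toy_embeddings["spark plug"], toy_embeddings["pancake recipe"])
print(f"spark plug vs engine misfire: {related:.2f}")
print(f"spark plug vs pancake recipe: {unrelated:.2f}")
```

The two engine-related phrases score close to 1, while the unrelated pair scores close to 0.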

When you publish content online, you’re not just writing for human readers. You’re also creating data points that large language models interpret, store, and retrieve. Every sentence, keyword, or entity you use shapes the embedding that represents your passage to a machine. If that embedding sits close to the embedding of a user’s question, your content is more likely to surface when AI is building responses. If the passage is vague or scattered across several topics, its embedding drifts away from any specific question and your material risks being overlooked.

This shift reframes the way we think about content strategy. It’s no longer just about producing “SEO-friendly” text. Instead, it’s about designing material that improves the quality of its embedding – making it semantically rich, logically structured, and aligned with how AI organizes knowledge.

Why This Matters

Think of embeddings as coordinates on a map. Pages that use clear, related terminology sit close together in vector space, forming clusters that retrieval systems can read as a sign of topical depth. If your site has strong content clusters on related topics, you build topical strength. On the other hand, thin content with poor semantic variety will sit isolated in that space, making it harder for AI to see it as relevant.

How We Approach Relevance at NUOPTIMA

At NUOPTIMA, we’ve built our work around the same principles that make content succeed in AI-driven search. For us, Relevance Engineering isn’t just a buzzword – it’s how we help brands get found, trusted, and chosen.

We focus on making content usable for both people and machines. That means creating pages that answer real questions, structuring information so it’s easy to extract, and ensuring every piece fits into a broader semantic cluster. Whether it’s through technical SEO, high-authority link building, or content strategies informed by AI insights, our aim is always the same: visibility that drives measurable growth.

Here’s what that looks like in practice:

- SEO with depth: We optimize not just for rankings, but for long-term revenue impact.

- Content built for AI: Every article, landing page, or guide is crafted to be clear, comprehensive, and easy for search engines and LLMs to retrieve.

- Link building that lasts: We prioritize high-quality, authoritative backlinks that strengthen trust and topical authority.

- International reach: From hreflang setups to localized strategies, we help brands expand beyond borders.

- Technical precision: We fine-tune site speed, structure, and indexing so nothing gets in the way of being surfaced in search.

We’ve seen the shift firsthand: Google’s AI Overviews, ChatGPT, and platforms like Perplexity are changing how people discover information. Our role is to make sure our clients don’t just adapt but thrive. That’s why we treat every campaign as an investment in relevance – the factor that now determines who shows up when decisions are being made.

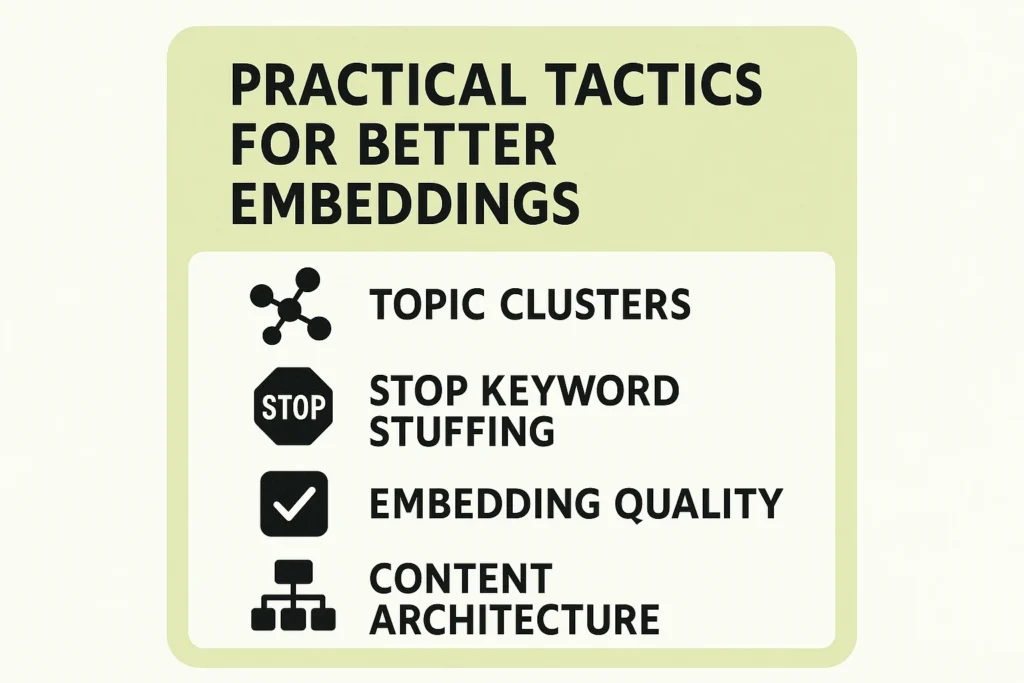

7 Practical Tactics for Better Embeddings

Improving how your content performs in AI-driven search isn’t just about writing more words – it’s about engineering material so machines can interpret it correctly. Below are seven practical ways to strengthen your content embeddings and make your pages more relevant in vector space.

1. Build Strong Topic Clusters

Search engines and LLMs recognize authority by looking at how well your content is organized around themes. If you publish one isolated article on a subject, it doesn’t carry much weight. But if you build a series of connected pages – supported by clear internal links and structured categories – you form a semantic cluster. These clusters signal depth and expertise, making your site more visible to AI systems.
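One simple check on a cluster is whether every page in it is actually linked from another page in the same cluster. The sketch below assumes you already have a map of internal links (for example, from a site crawl); the URLs and the `find_orphans` helper are hypothetical.

```python
def find_orphans(links: dict[str, set[str]]) -> list[str]:
    """Return cluster pages that no other page in the cluster links to."""
    linked_to = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself does not count
    }
    return sorted(page for page in links if page not in linked_to)

# Hypothetical cluster: each key is a page, each value is the set of pages it links to.
cluster = {
    "/engine-repair": {"/engine-repair/spark-plugs", "/engine-repair/oil-leaks"},
    "/engine-repair/spark-plugs": {"/engine-repair"},
    "/engine-repair/oil-leaks": {"/engine-repair"},
    "/engine-repair/misfires": {"/engine-repair"},
}

print(find_orphans(cluster))
```

Here the misfires page links out to the main guide, but nothing links back to it, so it sits at the edge of the cluster rather than inside it.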

2. Stop Keyword Stuffing

Repeating a keyword ten times on a page no longer improves rankings; it actually hurts credibility. Instead, focus on writing naturally. Use variations, synonyms, and related phrases that reflect how real people talk about the topic. This makes the text more engaging for readers and improves semantic scoring at the same time.

3. Focus on Embedding Quality

Embedding quality comes from more than just adding terms. It’s about shaping your content so it reflects the real relationships between concepts. That means breaking long explanations into short, self-contained passages, making sure each paragraph delivers a clear point, and weaving in relevant entities like people, organizations, or locations that strengthen context.

4. Build Solid Content Architecture

Good writing and good structure go hand in hand. AI models are more likely to retrieve passages if your content is easy to follow. Use clear paragraphs, subheadings, and logical progression from one idea to the next. Add lists and tables where they make sense. The result is content that humans find easy to read and machines find easy to extract.

5. Use Structured Data to Your Advantage

Schema markup may not change how your content reads to a visitor, but it changes how search engines interpret it. By labeling FAQs, how-to guides, product details, or reviews with structured data, you make the meaning explicit. This helps search systems connect your content to the right queries. Whether markup influences the embedding itself depends on whether a given pipeline reads it, so treat structured data as a complement to clear on-page text rather than a substitute for it.
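As a small illustration, the sketch below builds FAQ markup in JSON-LD using the schema.org `FAQPage`, `Question`, and `Answer` types. The `faq_jsonld` helper and the sample question are assumptions for this example; check the current schema.org and search engine guidelines before publishing markup.

```python
import json

def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage markup (JSON-LD) from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)

# Hypothetical FAQ entry used only for illustration.
markup = faq_jsonld([
    ("What causes an engine misfire?",
     "Common causes include faulty spark plugs and oil leaks."),
])
print(markup)
```

The output would typically be placed inside a `<script type="application/ld+json">` tag on the page.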

6. Strengthen Internal Linking

Links inside your site are signals of relevance. They show search engines how different pieces of content relate to each other. But not all links carry the same weight. Use descriptive anchor text instead of generic “click here.” Link between in-depth articles, case studies, and product pages on the same subject to build authority and help AI understand your topical map.
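A quick way to find weak anchor text is to scan a page’s HTML for links whose visible text is generic. The sketch below uses Python’s built-in `html.parser`; the `GENERIC_ANCHORS` list and the `AnchorAudit` class are assumptions for this example, so adjust the list to your own site.

```python
from html.parser import HTMLParser

# Hypothetical list of anchor texts that say nothing about the target page.
GENERIC_ANCHORS = {"click here", "here", "read more", "learn more", "this page"}

class AnchorAudit(HTMLParser):
    """Collect (href, text) pairs for links with generic anchor text."""

    def __init__(self) -> None:
        super().__init__()
        self._href = None   # href of the <a> tag currently open, if any
        self._text = []     # text fragments seen inside that tag
        self.generic = []   # results: list of (href, anchor text)

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href") or ""
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse whitespace so "Click   here" still matches.
            text = " ".join("".join(self._text).split())
            if text.lower() in GENERIC_ANCHORS:
                self.generic.append((self._href, text))
            self._href = None

html = ('<p><a href="/guide">Click here</a> or read our '
        '<a href="/spark-plugs">spark plug replacement guide</a>.</p>')
audit = AnchorAudit()
audit.feed(html)
print(audit.generic)
```

Only the first link is flagged; the second one already tells both readers and machines what the target page is about.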

7. Write for User Intent First

Perhaps the most important tactic is to focus on the audience, not the algorithm. Ask yourself what a person searching this topic would want to know, and then make sure your content delivers it. Cover the main question, but also include sub-questions and related angles that give a full picture. By prioritizing user intent, you create material that naturally aligns with how AI systems evaluate relevance.

Together, these tactics help you design content that works on two levels: it’s valuable for readers and it’s optimized for the way machines process meaning. That’s the balance Relevance Engineering aims to achieve.

Making Sure Your Content Survives the AI Filter

One of the biggest shifts in content strategy today is recognizing that humans are no longer the only audience. AI systems are now gatekeepers, interpreting, reshaping, and redistributing the information you publish. Because of that, it’s no longer enough to assume your content is working just because it looks good to readers. You need a way to see it the way machines see it, and that’s where simulation comes in.

Testing embeddings through simulation lets you preview how an AI system might interpret and retrieve your content. Instead of waiting for real-world results, you can stress-test your pages against scenarios that mimic LLM behavior. This helps you identify weak spots, improve clarity, and make your material more “retrievable” before it goes live at scale.

Using Prompt Injection for Testing

Prompt injection is usually discussed in the context of security: it means crafting input that tricks an AI into doing something outside of its intended instructions. Marketers can borrow the underlying idea, without the malicious intent, as a diagnostic tool. By running carefully designed prompts against your own content, you can see how an AI system interprets it.

For example, you might feed in a prompt that asks the model to summarize your page or extract key facts. If the result is incomplete, confusing, or misses your main points, that’s a sign your passages aren’t structured clearly enough. This kind of testing gives you insight into whether your content is optimized for machine understanding, not just human readability.
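Here is a minimal sketch of that check. It builds a summary prompt and then tests whether the facts you care about survive in the model’s answer. No model is called in this example: `model_summary` is a hand-written stand-in for whatever your LLM returns, and the function names and sample facts are hypothetical.

```python
def build_summary_prompt(page_text: str) -> str:
    """Wrap page text in a simple diagnostic prompt."""
    return (
        "Summarize the following page in three sentences "
        "and list its key facts.\n\n"
        f"PAGE:\n{page_text}"
    )

def missing_facts(summary: str, key_facts: list[str]) -> list[str]:
    """Return the key facts that do not appear in the summary (case-insensitive)."""
    lowered = summary.lower()
    return [fact for fact in key_facts if fact.lower() not in lowered]

# Facts you expect any good summary of the page to mention (illustrative values).
key_facts = ["spark plugs", "torque", "30,000 miles"]

# Stand-in for a real model response; replace with the output of your own LLM call.
model_summary = "The page explains how to replace spark plugs and how often to do it."

print(missing_facts(model_summary, key_facts))
```

Any fact that shows up in the missing list is a candidate for a clearer, more self-contained passage. Exact substring matching is deliberately strict here; a looser comparison may suit paraphrased summaries better.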

Going Deeper with Retrieval Simulation

Retrieval simulation takes the process a step further. Instead of testing only the final output, it recreates the whole cycle of how LLM-based systems search, retrieve, and assemble answers from a knowledge base. This mirrors what actually happens when AI-powered search tools decide whether your page deserves to be cited.

The process typically involves three steps:

- Build a test dataset: Write sample queries along with the ideal answers your content should deliver.

- Simulate retrieval: Use a vector database or embedding model to test whether your passages get surfaced for those queries.

- Review results: Compare what the system retrieved against what you expected. If important sections are missing or irrelevant snippets are being pulled instead, that’s a signal your embeddings need refinement.
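The three steps above can be sketched in a few lines. To keep the example self-contained, it uses a bag-of-words vector as a toy stand-in for a real embedding model and a plain dictionary instead of a vector database; the passages, queries, and function names are all assumptions for illustration.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. A real setup would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0

def retrieve(query: str, passages: dict[str, str]) -> str:
    """Return the id of the passage most similar to the query."""
    query_vec = embed(query)
    return max(passages, key=lambda pid: cosine(query_vec, embed(passages[pid])))

# Hypothetical passages from a recipe page.
passages = {
    "ingredients": "Ingredients: 2 eggs, 100 g flour, 250 ml milk.",
    "instructions": "Instructions: whisk the batter, then fry each pancake in a hot pan.",
    "cooking-time": "Cooking time: about 15 minutes in total.",
}

# Step 1: test dataset of (query, id of the passage that should be retrieved).
test_set = [
    ("what ingredients do I need", "ingredients"),
    ("how long is the cooking time", "cooking-time"),
    ("how do I fry the pancake", "instructions"),
]

# Steps 2 and 3: simulate retrieval, then compare against expectations.
hits = 0
for query, expected in test_set:
    got = retrieve(query, passages)
    hits += got == expected
    print(f"{query!r}: expected {expected}, got {got}")
print(f"{hits}/{len(test_set)} queries retrieved the expected passage")
```

Word-count vectors only match exact words, so treat this as a harness for the workflow rather than a measure of semantic quality. Swapping `embed` for a real embedding model keeps the rest of the loop unchanged.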

Why It Matters

Retrieval simulation helps you understand how LLMs “see” your content – often in ways humans can’t. You may think your article is perfectly clear, but if passages aren’t properly chunked, if subtopics aren’t defined, or if internal links don’t support context, AI may skip over it. By running these simulations, you can proactively fix gaps before they cost you visibility.

In short, testing with prompt injection and retrieval simulation isn’t about gaming the system. It’s about making sure the work you’ve put into creating useful, relevant content translates into a format that AI can actually recognize and use.

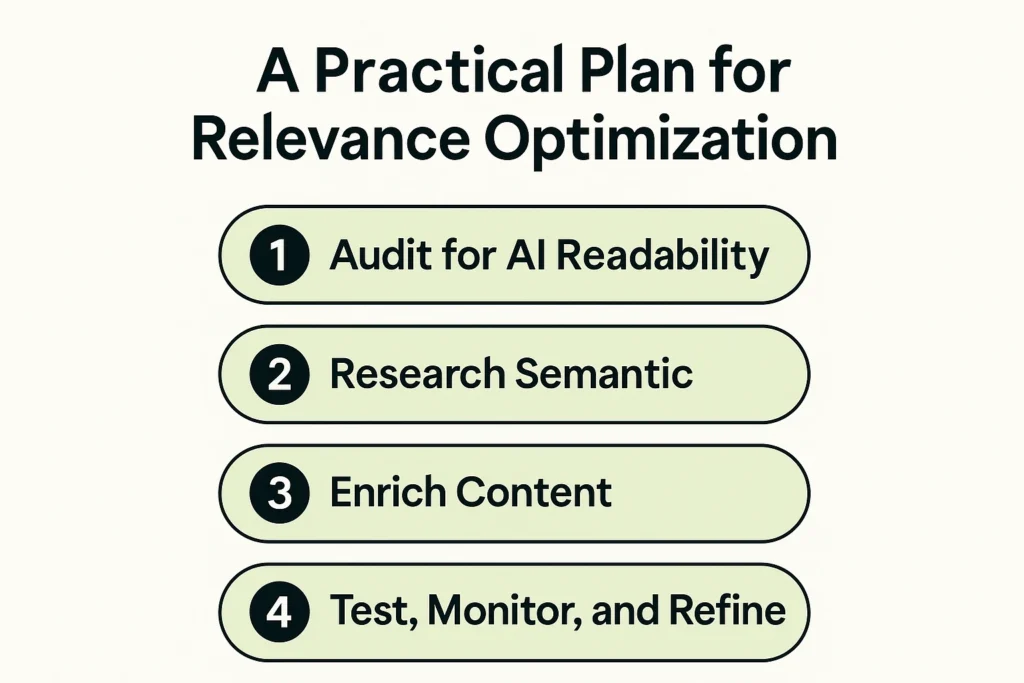

A Practical Plan for Relevance Optimization

Relevance Engineering may sound complex, but in practice, it follows a process that looks familiar to anyone who’s worked in SEO or content strategy. The difference is that instead of focusing only on keywords and backlinks, the emphasis shifts to how AI systems interpret, retrieve, and assemble information. Below is a step-by-step framework you can apply to evaluate and improve your content for an AI-driven search landscape.

Step 1: Audit for AI Readability and Extractability

Begin with a detailed review of your content. Ask:

- Are key entities and topics clearly identified?

- Can individual passages stand alone, or are they too dependent on surrounding text?

- Do sections deliver concise, factual, and accurate answers?

- Are signals of experience, expertise, authoritativeness, and trustworthiness (E-E-A-T) visible?

This first stage helps you spot weaknesses that might make your content invisible to AI-driven systems, even if it looks fine to human readers.
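Part of this audit can be roughed out in code. The sketch below flags passages that open with a reference to earlier text or run past a word budget. Both rules are simple heuristics, and the opener list, the 120-word limit, and the `audit_passage` helper are assumptions for this example rather than established thresholds.

```python
# Hypothetical openers that often signal dependence on preceding text.
DANGLING_OPENERS = ("it ", "this ", "that ", "they ", "these ", "those ", "as mentioned")

def audit_passage(passage: str, max_words: int = 120) -> list[str]:
    """Return a list of heuristic warnings for one passage."""
    issues = []
    stripped = passage.strip()
    if stripped.lower().startswith(DANGLING_OPENERS):
        issues.append("opens with a reference to earlier text")
    if len(stripped.split()) > max_words:
        issues.append(f"longer than {max_words} words")
    return issues

# Illustrative passages.
samples = [
    "It also prevents misfires.",
    "Spark plugs ignite the fuel-air mixture in each cylinder.",
]
for sample in samples:
    print(sample, "->", audit_passage(sample) or "ok")
```

The first sample is flagged because a reader, or a retrieval system, seeing it in isolation cannot tell what "it" refers to. Expect some false positives (a passage starting with "This guide explains" is fine), so use the output as a prompt for human review.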

Step 2: Research Semantic and Latent Intent

The way people phrase queries has changed. They now ask full questions, use conversational phrasing, and often imply intent without stating it directly. To prepare for that, map out:

- The obvious questions and search queries.

- The unspoken sub-intents (for example, someone searching “best running shoes” may also want durability, injury prevention, or price comparisons).

- The related entities and concepts that round out the topic.

This ensures your content covers not only what’s explicitly asked but also the layers of meaning behind those queries.

Step 3: Structure and Enrich Content for AI

Once the research is done, restructure your content so it works at the level of semantic chunks. Break complex topics into smaller, digestible sections. Use headings, lists, and tables to make each part distinct. Keep the language clear and direct – ambiguity reduces extractability.

Adding structured data is essential here. Schema markup, entity tagging, and even internal ontologies help machines connect your content to broader knowledge graphs. This step makes your work more machine-readable and increases its chances of being retrieved.

Step 4: Test, Monitor, and Refine

Don’t stop at publishing. Run simulations to see whether AI systems can pull your passages accurately. Monitor whether your site appears in AI Overviews or AI-generated citations. Study competitor content that gets cited and compare structures, topics, and passage clarity.

From there, refine your material. Tweak headings, improve semantic variety, or add missing subtopics. Treat this as an ongoing cycle rather than a one-off project.

Why Relevance Engineering is the New Standard

If your brand isn’t showing up in AI-driven results, it’s absent from the conversations that influence decisions. Relevance Engineering closes that gap by making sure your content isn’t just written well, but also structured and optimized for how AI retrieves information.

This approach doesn’t replace good writing – it builds on it. Content must still be engaging, accurate, and useful to people. But now, it also has to meet the technical and semantic requirements that let machines see it as relevant. Brands that adapt to this shift will own more visibility, earn more trust, and stay ahead as search continues to evolve.

Frequently Asked Questions

What is Relevance Engineering?

Relevance Engineering is the practice of designing content that works for both people and machines. Instead of just focusing on keywords, it’s about making sure your content is semantically rich, structured clearly, and easy for AI systems to pull into answers.

Why doesn’t keyword repetition work anymore?

Because modern algorithms don’t stop at matching exact keywords. They evaluate how well your content covers a topic in context. A page with varied, related terminology usually outperforms one that just repeats the same phrase over and over.

What are embeddings, and why do they matter?

Embeddings are numerical representations of your content in vector space. Think of them as coordinates on a map – the closer your content is to related concepts, the more visible it becomes. High-quality embeddings make it easier for AI to retrieve and rank your pages.

How can prompt injection help with content testing?

While often mentioned as a security concern, prompt injection can also be used as a testing tool. By feeding specific prompts into an LLM, you can see how it interprets your content and whether it captures your main points correctly.

What is the main goal of Relevance Engineering?

The goal is simple: to make sure your brand shows up when AI-driven platforms deliver answers. If your content isn’t being retrieved, you’re invisible in the spaces where decisions are made. Relevance Engineering ensures you’re part of the conversation.